How to Fine-tune GPT-4o mini: Step-by-Step Guide

Learn how to fine-tune GPT-4o mini to improve its performance for custom use cases.

DATE

Tue Sep 17 2024

AUTHOR

Felix Wunderlich

CATEGORY

Guide

Fine-Tuning GPT-4o mini For Better Performance

Fine-tuning GPT-4o mini can significantly improve its performance for specialized tasks. This process involves training the model on a dataset of input-output pairs. The dataset includes inputs, which are the prompts given to the model, and outputs, which are the ideal responses. By carefully crafting these pairs, you ensure the model produces outputs that align with your desired style, tone, and accuracy.

In this guide, we’ll take you through the steps to fine-tune GPT-4o mini and show you how to optimize its performance for different use cases.

Key Takeaways

- Creating a Dataset: Learn how to build a dataset of high-quality, relevant examples for your specific use case, which GPT-4o mini is then trained on.

- Fine-Tuning Process: Step-by-step instructions to fine-tune GPT-4o mini to improve model performance and deliver more accurate outputs.

- Handling Common Issues: Discover strategies to address issues like inconsistent tone, incomplete information, and formatting errors in the fine-tuning process.

Common Issues with GPT-4o mini

- Inconsistent Tone: Maintaining a consistent tone and style can be difficult without fine-tuning, leading to outputs that vary in quality.

- Incomplete Information: Important details related to the task at hand may be missing or inaccurately represented in the output.

- Formatting Errors: Standard models like GPT-4o mini often produce outputs with inconsistent or incorrect formatting, making it harder to use the content directly.

By fine-tuning GPT-4o mini, you can address these issues and ensure high-quality, consistent, and reliable outputs for your specific use case.

E-Commerce Fine-Tuning Use Case For Product Descriptions

Let’s take an example from a women’s fashion brand, Ocean Breeze, which specializes in swimwear. The goal is to generate accurate, consistent, and polished product descriptions that resonate with customers and highlight product features.

Here’s an example of typical raw product data for a swimsuit:

Raw Product Data

- Product Name: One-Shoulder Swimsuit

- Material Composition: Outer Layer: 80% Recycled Nylon, 20% Elastane; Lining: 100% Polyester; 65% of the product weight is made from recycled materials

- Size Options: Small, Medium, Large

- Model Information: Model is 170cm/5’7” tall and wears a size Small

- Design Features: One-shoulder strap, Cut-out detailing at the waist, High-cut legs

- Color: Midnight Blue

- Intended Use: Swimming

- Care Instructions: Hand wash cold, Do not bleach, Lay flat to dry, Do not iron

- Sustainability Information: Made from 65% recycled materials, primarily from discarded fishing nets and recycled fabrics. Excludes smaller components like stitching and fastenings.

Desired Output Description

The Desired Output Description is the polished product description that the AI model should generate from raw product data. It can be used directly on a website or e-commerce platform, so it should be accurate, engaging, and easy for customers to read. The example below illustrates the target format with a different product, the Tie-Dye Halter Bikini, which is also used in the dataset entry later in this guide.

Description:

Make waves with the Tie-Dye Halter Bikini from the Ocean Breeze Tropical Collection. This vibrant blue tie-dye bikini features a halter tie top with an adjustable back for a perfect fit, while the low-rise bottoms offer a flattering silhouette with moderate coverage. Fully lined for comfort, this bikini is perfect for your next beach day.

Art.nr: OB556677

Model size: The model is 173cm/5’8” tall and wears a size M

Sleeve length: Sleeveless

Model: Halter tie top, Adjustable back tie, Low-rise bottoms

Neckline: Halter

Sports activity: Swimming

Description: Blue Tie-Dye, Multicolor

Concept: Ocean Breeze Tropical Collection

Worth knowing: Lined

Material:

Outer layers: Recycled Polyester 79%, Elastane 21%

Inner lining: Polyester 100%

Additional material information:

The total weight of the product consists of 67% recycled materials, sourced from repurposed plastic bottles and used textiles. The weight of smaller components like trims and stitching is excluded from this calculation. For sets and multipacks, all parts are calculated together as one product.

Explanation of the materials in this product:

Recycled polyester: Made from repurposed plastic bottles and used textiles, offering durability and sustainability.

Elastane: A synthetic fiber providing flexibility and stretch, derived from fossil fuels.

Polyester: A durable synthetic fiber produced from crude oil.

Care advice: Support a more sustainable fashion industry by recycling your old swimwear at Ocean Breeze stores.

Care advice:

Hand wash cold

Do not bleach

Do not tumble dry

Line dry

Fine-tuning on these kinds of input-output pairs trains the model to replicate the desired tone, detail, and structure seen in the output, ensuring consistency and accuracy across all generated product descriptions.

Creating a Fine-Tuning Dataset for E-Commerce Descriptions

Define Your Objective

The first step in fine-tuning GPT-4o mini is clearly defining the objective.

In this case, we want to continue with the above example and generate product descriptions that are:

- Concise and polished in layout.

- Consistent with the brand tone.

- Factual and accurate, including all necessary product details.

Collect and Organize Data

Start by collecting product data that you want the model to learn from. For Ocean Breeze, they likely already have a collection of existing product descriptions that can be used as a foundation. These descriptions should include detailed product information, such as features, material composition, and sustainability details.

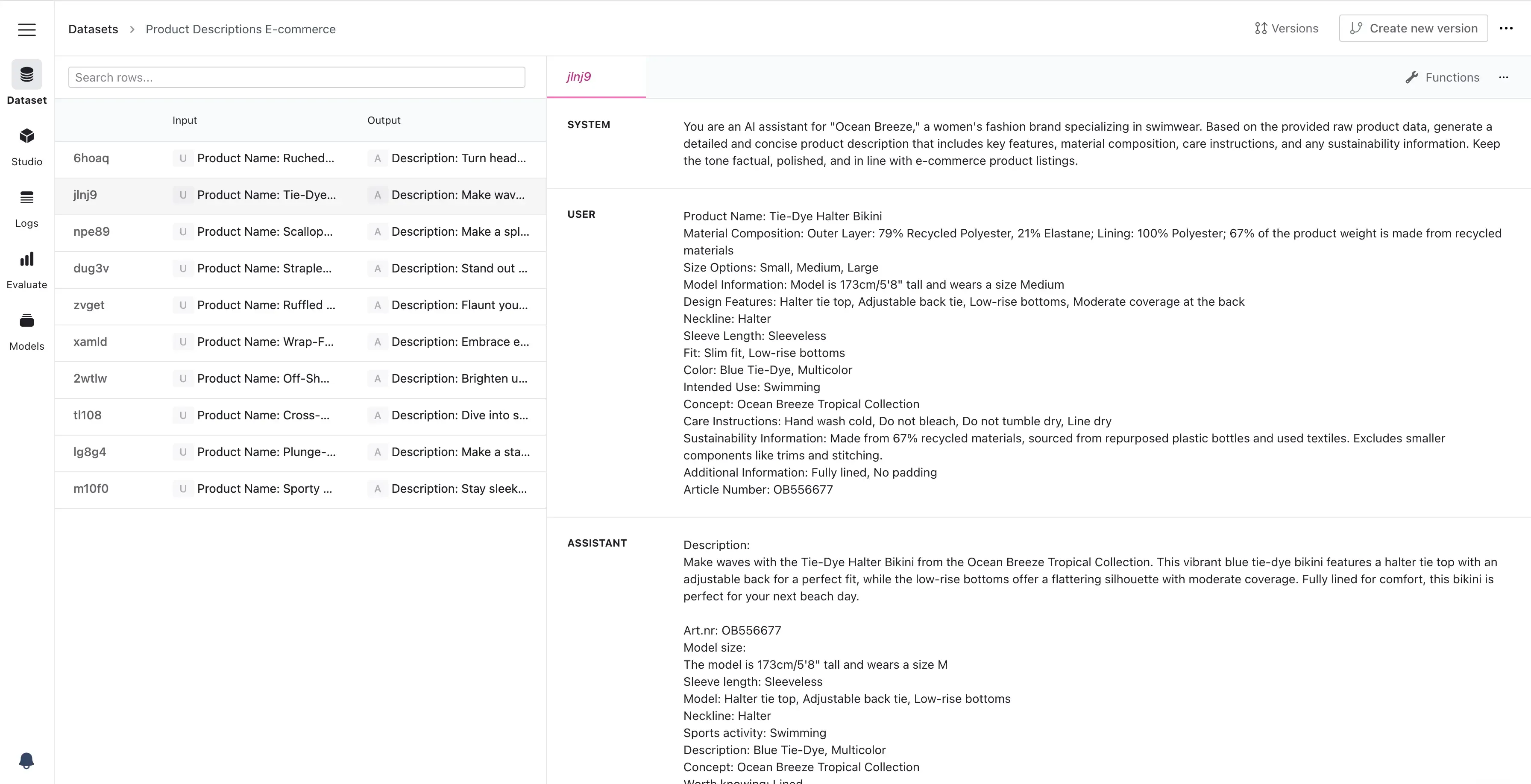

Example Dataset Entry for Fine-Tuning:

System

You are an AI assistant for “Ocean Breeze,” a women’s fashion brand specializing in swimwear. Based on the provided raw product data, generate a detailed and concise product description that includes key features, material composition, care instructions, and any sustainability information. Keep the tone factual, polished, and in line with e-commerce product listings.

User (Input)

Product Name: Tie-Dye Halter Bikini

Material Composition: Outer Layer: 79% Recycled Polyester, 21% Elastane; Lining: 100% Polyester; 67% of the product weight is made from recycled materials

Size Options: Small, Medium, Large

Model Information: Model is 173cm/5’8” tall and wears a size Medium

Design Features: Halter tie top, Adjustable back tie, Low-rise bottoms, Moderate coverage at the back

Neckline: Halter

Sleeve Length: Sleeveless

Fit: Slim fit, Low-rise bottoms

Color: Blue Tie-Dye, Multicolor

Intended Use: Swimming

Concept: Ocean Breeze Tropical Collection

Care Instructions: Hand wash cold, Do not bleach, Do not tumble dry, Line dry

Sustainability Information: Made from 67% recycled materials, sourced from repurposed plastic bottles and used textiles. Excludes smaller components like trims and stitching.

Additional Information: Fully lined, No padding

Article Number: OB556677

Assistant (Expected Model Output)

Description:

Make waves with the Tie-Dye Halter Bikini from the Ocean Breeze Tropical Collection. This vibrant blue tie-dye bikini features a halter tie top with an adjustable back for a perfect fit, while the low-rise bottoms offer a flattering silhouette with moderate coverage. Fully lined for comfort, this bikini is perfect for your next beach day.

Art.nr: OB556677

Model size: The model is 173cm/5’8” tall and wears a size M

Sleeve length: Sleeveless

Model: Halter tie top, Adjustable back tie, Low-rise bottoms

Neckline: Halter

Sports activity: Swimming

Description: Blue Tie-Dye, Multicolor

Concept: Ocean Breeze Tropical Collection

Worth knowing: Lined

Material:

Outer layers: Recycled Polyester 79%, Elastane 21%

Inner lining: Polyester 100%

Additional material information:

The total weight of the product consists of 67% recycled materials, sourced from repurposed plastic bottles and used textiles. The weight of smaller components like trims and stitching is excluded from this calculation. For sets and multipacks, all parts are calculated together as one product.

Explanation of the materials in this product:

Recycled polyester: Made from repurposed plastic bottles and used textiles, offering durability and sustainability.

Elastane: A synthetic fiber providing flexibility and stretch, derived from fossil fuels.

Polyester: A durable synthetic fiber produced from crude oil.

Care advice:

Support a more sustainable fashion industry by recycling your old swimwear at Ocean Breeze stores.

Care advice:

Hand wash cold

Do not bleach

Do not tumble dry

Line dry

Structuring the Dataset

The dataset must follow OpenAI’s chat format in JSONL to fine-tune GPT-4o mini. Each example consists of a system message (the prompt), a user message (the product’s raw data), and an assistant message (the desired model answer).
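
As a rough sketch of how such an example could be assembled in code, the snippet below serializes one system/user/assistant triple into a single line. The function name `build_example` is hypothetical, and the strings are shortened versions of the Ocean Breeze example, not the full entry.

```python
import json


def build_example(system_prompt: str, raw_product_data: str, description: str) -> str:
    """Return one chat-format training example serialized as a single JSONL line."""
    example = {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": raw_product_data},
            {"role": "assistant", "content": description},
        ]
    }
    # json.dumps escapes embedded newlines as \n, so the example stays on one physical line.
    return json.dumps(example, ensure_ascii=False)


# Shortened, illustrative strings based on the Ocean Breeze example.
line = build_example(
    "You are an AI assistant for 'Ocean Breeze,' a women's fashion brand specializing in swimwear.",
    "Product Name: Tie-Dye Halter Bikini\nColor: Blue Tie-Dye, Multicolor",
    "Make waves with the Tie-Dye Halter Bikini from the Ocean Breeze Tropical Collection.",
)
print(line)
```

Writing one such line per product to a `.jsonl` file yields a dataset in the structure described above.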

Here’s the above example dataset entry in the format used for fine-tuning GPT-4o mini for product descriptions. It is pretty-printed here for readability; in the actual JSONL file, each example must sit on a single line:

{

"messages": [

{

"role": "system",

"content": "You are an AI assistant for 'Ocean Breeze,' a women's fashion brand specializing in swimwear. Based on the provided raw product data, generate a detailed and concise product description that includes key features, material composition, care instructions, and any sustainability information. Keep the tone factual, polished, and in line with e-commerce product listings."

},

{

"role": "user",

"content": "Product Name: Tie-Dye Halter Bikini\nMaterial Composition: Outer Layer: 79% Recycled Polyester, 21% Elastane; Lining: 100% Polyester; 67% of the product weight is made from recycled materials\nSize Options: Small, Medium, Large\nModel Information: Model is 173cm/5'8\" tall and wears a size Medium\nDesign Features: Halter tie top, Adjustable back tie, Low-rise bottoms, Moderate coverage at the back\nNeckline: Halter\nSleeve Length: Sleeveless\nFit: Slim fit, Low-rise bottoms\nColor: Blue Tie-Dye, Multicolor\nIntended Use: Swimming\nConcept: Ocean Breeze Tropical Collection\nCare Instructions: Hand wash cold, Do not bleach, Do not tumble dry, Line dry\nSustainability Information: Made from 67% recycled materials, sourced from repurposed plastic bottles and used textiles. Excludes smaller components like trims and stitching.\nAdditional Information: Fully lined, No padding\nArticle Number: OB556677"

},

{

"role": "assistant",

"content": "Make waves with the Tie-Dye Halter Bikini from the Ocean Breeze Tropical Collection. This vibrant blue tie-dye bikini features a halter tie top with an adjustable back for a perfect fit, while the low-rise bottoms offer a flattering silhouette with moderate coverage. Fully lined for comfort, this bikini is perfect for your next beach day.\n\nArt.nr: OB556677\nModel size: The model is 173cm/5'8\" tall and wears a size M\nSleeve length: Sleeveless\nModel: Halter tie top, Adjustable back tie, Low-rise bottoms\nNeckline: Halter\nSports activity: Swimming\nDescription: Blue Tie-Dye, Multicolor\nConcept: Ocean Breeze Tropical Collection\nWorth knowing: Lined\n\nMaterial:\nOuter layers: Recycled Polyester 79%, Elastane 21%\nInner lining: Polyester 100%\n\nAdditional material information: The total weight of the product consists of 67% recycled materials, sourced from repurposed plastic bottles and used textiles. The weight of smaller components like trims and stitching is excluded from this calculation. For sets and multipacks, all parts are calculated together as one product.\n\nExplanation of the materials in this product:\nRecycled polyester: Made from repurposed plastic bottles and used textiles, offering durability and sustainability.\nElastane: A synthetic fiber providing flexibility and stretch, derived from fossil fuels.\nPolyester: A durable synthetic fiber produced from crude oil.\n\nCare advice:\nSupport a more sustainable fashion industry by recycling your old swimwear at Ocean Breeze stores.\n\nCare advice:\nHand wash cold\nDo not bleach\nDo not tumble dry\nLine dry"

}

]

}

Fine-Tuning GPT-4o mini for Product Descriptions

Follow this step-by-step guide to fine-tune GPT-4o mini for creating consistent, accurate, and brand-aligned product descriptions based on your specific catalog data.

Prepare the Dataset

Ensure your product descriptions dataset is formatted as JSONL in the chat format shown above, with one example per line containing system, user, and assistant messages.
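
Before uploading, it can help to sanity-check the file. The following is a minimal sketch of such a check, not an official validator: the function name is hypothetical, it only accepts the three roles used in this guide, and the rule that each example ends with an assistant message is an assumption based on the examples here.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}  # the roles used in this guide


def validate_jsonl_lines(lines):
    """Return a list of (line_number, problem) tuples; an empty list means no problems found."""
    problems = []
    for number, line in enumerate(lines, start=1):
        if not line.strip():
            problems.append((number, "blank line"))
            continue
        try:
            example = json.loads(line)
        except json.JSONDecodeError as err:
            problems.append((number, f"invalid JSON: {err.msg}"))
            continue
        messages = example.get("messages") if isinstance(example, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append((number, "missing 'messages' list"))
            continue
        roles = [m.get("role") for m in messages if isinstance(m, dict)]
        if len(roles) != len(messages) or any(r not in ALLOWED_ROLES for r in roles):
            problems.append((number, "unexpected role"))
        elif roles[-1] != "assistant":
            problems.append((number, "last message is not from the assistant"))
    return problems


good = json.dumps({"messages": [
    {"role": "system", "content": "s"},
    {"role": "user", "content": "u"},
    {"role": "assistant", "content": "a"},
]})
bad = '{"prompt": "u", "completion": "a"}'  # not in the chat format
print(validate_jsonl_lines([good, bad]))
```

To check a real file, pass the open file handle (for example `validate_jsonl_lines(open("train.jsonl", encoding="utf-8"))`, where the file name is a placeholder).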

Selecting the Base Model

Start by selecting GPT-4o mini as your base model. It’s efficient and compact, making it ideal for fine-tuning on specific tasks like generating product descriptions for a specialized domain such as e-commerce.

Set Training Parameters

Fine-tuning GPT-4o mini effectively involves setting a few key training parameters: the number of epochs, the batch size, and the learning rate multiplier. Here’s what each parameter affects:

- Epochs: The number of epochs to train the model for. An epoch refers to one full cycle through the training dataset.

- Batch Size: Number of examples in each batch. A larger batch size means that model parameters are updated less frequently, but with lower variance.

- Learning Rate Multiplier: Scaling factor for the learning rate. A smaller learning rate may be useful to avoid overfitting.

Start with the default settings (auto) and adjust only if the output or model performance isn’t meeting expectations.
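
As an illustration, these three parameters might be expressed in a job request as shown below. This is a sketch under assumptions: `build_job_request` is a hypothetical helper, the file ID is a placeholder, and the snapshot name `gpt-4o-mini-2024-07-18` and the `hyperparameters` field reflect the OpenAI API at the time of writing (newer API versions may expect these values under a `method` field, so check the current reference).

```python
def build_job_request(training_file_id, n_epochs="auto", batch_size="auto",
                      learning_rate_multiplier="auto"):
    """Assemble keyword arguments for a fine-tuning job, defaulting every parameter to 'auto'."""
    return {
        "model": "gpt-4o-mini-2024-07-18",  # assumed fine-tunable snapshot name
        "training_file": training_file_id,
        "hyperparameters": {
            "n_epochs": n_epochs,
            "batch_size": batch_size,
            "learning_rate_multiplier": learning_rate_multiplier,
        },
    }


# Defaults first; override a single value only if results call for it.
default_request = build_job_request("file-abc123")  # placeholder file ID
more_epochs = build_job_request("file-abc123", n_epochs=3)
print(default_request["hyperparameters"])
print(more_epochs["hyperparameters"])
```

The resulting dictionary could be passed as `client.fine_tuning.jobs.create(**default_request)` with the OpenAI Python SDK.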

Start the Fine-Tuning Process

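Initiate fine-tuning and monitor the training progress. The training loss should decrease steadily; if you supply validation data and its loss starts rising while the training loss keeps falling, the model is likely overfitting.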
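
If you start the job through the API rather than a web interface, the flow could look roughly like this. It is a sketch that assumes the OpenAI Python SDK is installed and `OPENAI_API_KEY` is set; the function names, the polling interval, and the model snapshot name are assumptions, not part of the original guide.

```python
import time

# Job statuses after which polling can stop.
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def is_terminal(status):
    """Return True once a fine-tuning job has finished, whether or not it succeeded."""
    return status in TERMINAL_STATUSES


def run_fine_tuning(training_path, model="gpt-4o-mini-2024-07-18", poll_seconds=30):
    """Upload the dataset, start a job, and poll until it finishes."""
    from openai import OpenAI  # imported lazily so is_terminal works without the SDK

    client = OpenAI()
    with open(training_path, "rb") as handle:
        uploaded = client.files.create(file=handle, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=model)
    while not is_terminal(job.status):
        time.sleep(poll_seconds)
        job = client.fine_tuning.jobs.retrieve(job.id)
        # Recent events include progress messages, such as reported training loss.
        events = client.fine_tuning.jobs.list_events(fine_tuning_job_id=job.id, limit=3)
        for event in events.data:
            print(job.status, event.message)
    return job.fine_tuned_model  # None unless the job succeeded


print(is_terminal("running"), is_terminal("succeeded"))
```

Calling `run_fine_tuning("train.jsonl")` (a placeholder path) returns the name of the fine-tuned model once the job succeeds.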

Test the Fine-tuned Model

After training, test your fine-tuned GPT-4o mini model on raw data for products it has not seen during training to verify the quality of the outputs. It’s important to evaluate how well the model handles common issues such as:

- Consistency in Tone: Ensure the descriptions align with the brand voice by testing across various products.

- Comprehensive Details: Verify that key product information, such as material composition and care instructions, is not missed.

- Avoiding Repetitiveness: Make sure the model doesn’t generate overly formulaic or repetitive text, especially for similar products.
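
Some of these checks can be partly automated. The sketch below is one simple way to do it, assuming the section labels from the Ocean Breeze example output; the label list and function names are illustrative, and a character-level similarity ratio is only a crude proxy for repetitiveness.

```python
from difflib import SequenceMatcher

# Section labels taken from the example output in this guide; adjust to your own template.
REQUIRED_LABELS = ("Art.nr:", "Model size:", "Material:", "Care advice:")


def missing_sections(description, required=REQUIRED_LABELS):
    """Return the required labels that do not appear in the generated description."""
    return [label for label in required if label not in description]


def similarity(first, second):
    """Return a ratio from 0 to 1; values near 1 suggest near-duplicate descriptions."""
    return SequenceMatcher(None, first, second).ratio()


draft = (
    "Art.nr: OB556677\n"
    "Material:\nOuter layers: Recycled Polyester 79%\n"
    "Care advice:\nHand wash cold"
)
print(missing_sections(draft))
```

Tone consistency is harder to score automatically and is usually still reviewed by a person.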

Additionally, while testing, consider adjusting the following sampling parameters to improve the output quality:

- Temperature: Controls the randomness of the model’s outputs. A lower temperature (closer to 0) makes the model more deterministic, sticking closely to learned patterns, while a higher temperature (for example, 1) introduces more diversity and creativity into the responses.

- Frequency Penalty: Reduces the likelihood of the model repeating the same phrases or words by penalizing frequent terms. This can be useful for preventing repetitive descriptions across similar products.

These settings help balance creativity and consistency in the generated text.
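
One way to explore these settings is to generate the same description under a few combinations and compare the results side by side. The sketch below assumes the OpenAI Python SDK's chat completions call; the specific values tried and the function names are arbitrary choices for illustration.

```python
from itertools import product


def sampling_variants(temperatures=(0.0, 0.7, 1.0), frequency_penalties=(0.0, 0.5)):
    """Return every combination of the given temperature and frequency penalty values."""
    return [
        {"temperature": t, "frequency_penalty": p}
        for t, p in product(temperatures, frequency_penalties)
    ]


def compare_settings(client, model, messages):
    """Generate one description per sampling variant for side-by-side review."""
    results = []
    for settings in sampling_variants():
        response = client.chat.completions.create(model=model, messages=messages, **settings)
        results.append((settings, response.choices[0].message.content))
    return results


variants = sampling_variants()
for settings in variants:
    print(settings)
```

Here `client` would be an `OpenAI()` instance and `model` the name of your fine-tuned model.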

Consistency in Input Format for Fine-Tuned Models

For a fine-tuned GPT-4o mini model to generate accurate and polished product descriptions, it’s essential that the input follows a consistent format. This consistency allows the model to recognize the structure of the request and produce reliable outputs that match the required style and content.

In the case of fine-tuning for Ocean Breeze swimwear product descriptions, the input should always include:

- Product Name (e.g., Tie-Dye Halter Bikini)

- Material Composition (e.g., 79% Recycled Polyester, 21% Elastane; Lining: 100% Polyester)

- Design Features (e.g., halter tie top, low-rise bottoms)

- Size and Fit Details (e.g., size Medium, slim fit)

- Care Instructions (e.g., hand wash cold, do not bleach, line dry)

- Sustainability Information (if applicable, e.g., 67% recycled materials from plastic bottles)

By adhering to a predictable format, the fine-tuned model learns how to handle each aspect of the request effectively, ensuring that the output is both accurate and relevant to your e-commerce needs.
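
A small formatting helper can enforce this consistency in code. The following is a minimal sketch, assuming product data arrives as a dictionary keyed by the field names listed above; the function name and the choice to treat only sustainability information as optional are assumptions.

```python
# Fixed field order, mirroring the list above.
FIELD_ORDER = [
    "Product Name",
    "Material Composition",
    "Design Features",
    "Size and Fit Details",
    "Care Instructions",
    "Sustainability Information",
]
OPTIONAL_FIELDS = {"Sustainability Information"}  # "if applicable"


def format_product_input(product):
    """Render product data as 'Field: value' lines in a fixed order, rejecting incomplete input."""
    missing = [f for f in FIELD_ORDER if f not in OPTIONAL_FIELDS and not product.get(f)]
    if missing:
        raise ValueError(f"Missing required fields: {', '.join(missing)}")
    return "\n".join(f"{f}: {product[f]}" for f in FIELD_ORDER if product.get(f))


# Keys are deliberately out of order to show that the output order is fixed.
user_message = format_product_input({
    "Care Instructions": "Hand wash cold, Do not bleach, Line dry",
    "Product Name": "Tie-Dye Halter Bikini",
    "Size and Fit Details": "Size Medium, Slim fit",
    "Design Features": "Halter tie top, Low-rise bottoms",
    "Material Composition": "79% Recycled Polyester, 21% Elastane; Lining: 100% Polyester",
})
print(user_message)
```

Using the same helper for both the training data and production requests keeps the two formats from drifting apart.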

Deploying the Fine-Tuned Model

Once your GPT-4o mini model is fine-tuned and tested, deploying it is straightforward. You can deploy the model directly via the OpenAI platform. Simply choose the newly fine-tuned model within the OpenAI interface or API, just like selecting any other pre-trained model. When deployed, the model can produce outputs in real-time, tailored to your specific needs.
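
Through the API, using the fine-tuned model comes down to passing its name in the `model` field. The sketch below assumes the OpenAI Python SDK; the model ID is a made-up placeholder, the function names are hypothetical, and reusing the training system prompt at inference time is a common practice rather than something the platform enforces.

```python
# Same system prompt as in the training examples, so inference matches training.
SYSTEM_PROMPT = (
    "You are an AI assistant for 'Ocean Breeze,' a women's fashion brand specializing in "
    "swimwear. Based on the provided raw product data, generate a detailed and concise "
    "product description that includes key features, material composition, care "
    "instructions, and any sustainability information. Keep the tone factual, polished, "
    "and in line with e-commerce product listings."
)


def build_request(model_id, raw_product_data):
    """Assemble the chat completion arguments for one product."""
    return {
        "model": model_id,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": raw_product_data},
        ],
    }


def describe_product(client, model_id, raw_product_data):
    """Call the fine-tuned model and return the generated description."""
    response = client.chat.completions.create(**build_request(model_id, raw_product_data))
    return response.choices[0].message.content


# Placeholder ID; the real name is reported when your fine-tuning job succeeds.
request = build_request("ft:gpt-4o-mini-2024-07-18:my-org::abc123",
                        "Product Name: One-Shoulder Swimsuit")
print(request["model"])
```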

Post-Deployment Considerations

After deployment, it’s important to monitor the model’s performance in real-time applications. Gather feedback from users and analyze production logs to track how well the model handles various inputs. Monitoring helps you identify any areas where the model may need further refinement or retraining. Regularly updating your dataset and retraining the model based on real-world interactions ensures that the model continues to perform optimally as your business needs evolve.
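
A lightweight way to collect this feedback is to append every production interaction to a log file and later pull out the ones that were flagged. The following is a sketch only: the record fields, the feedback labels (`ok`, `needs_edit`), and the function names are hypothetical.

```python
import json
import os
import tempfile
import time


def log_interaction(path, raw_product_data, generated_description, feedback=None):
    """Append one production interaction as a JSON line."""
    record = {
        "timestamp": time.time(),
        "input": raw_product_data,
        "output": generated_description,
        "feedback": feedback,
    }
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_flagged(path, label="needs_edit"):
    """Return logged interactions whose feedback matches the given label."""
    with open(path, encoding="utf-8") as handle:
        records = [json.loads(line) for line in handle if line.strip()]
    return [r for r in records if r["feedback"] == label]


# Demo against a temporary file.
log_path = os.path.join(tempfile.mkdtemp(), "interactions.jsonl")
log_interaction(log_path, "Product Name: A", "Description A", feedback="ok")
log_interaction(log_path, "Product Name: B", "Description B", feedback="needs_edit")
flagged = load_flagged(log_path)
print(len(flagged))
```

Flagged interactions, once corrected by an editor, are natural candidates for new training examples in the next retraining round.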

Fine-Tuning for Custom Use Cases

Fine-tuning GPT-4o mini for custom use cases shows how large language models can be adapted to specific business needs. No matter the domain, training the model on relevant datasets tailors its outputs to the task at hand.

By working with domain experts to curate high-quality datasets, consistently structuring input data, and incorporating relevant industry-specific details, you can fine-tune models to deliver precise and relevant outputs.

Streamlining the Fine-Tuning Process with FinetuneDB

To make the fine-tuning and deployment process more efficient, FinetuneDB offers tools to simplify dataset creation, model training, and deployment.

Fine-tuning Dataset Editor

With FinetuneDB, you can easily create, upload, and manage datasets. The platform’s built-in editor allows for seamless collaboration and version control, ensuring datasets can be refined over time.

After dataset creation, fine-tuning is simplified through an intuitive interface where you can select pre-trained models, set training parameters, and monitor progress in real-time. Once the model is ready, deployment is just as easy, allowing you to integrate the fine-tuned model into production with minimal effort.

FinetuneDB also supports continuous improvement by tracking real-world interactions, allowing you to refine your model and datasets as your use case evolves.

Sign up at FinetuneDB, or contact us for a personalized demo and see how we can help you streamline your fine-tuning operations.

Frequently Asked Questions (FAQ)

1. What is GPT-4o mini, and why should businesses consider fine-tuning it?

GPT-4o mini is a compact version of GPT-4o, designed for efficiency and flexibility. Fine-tuning GPT-4o mini allows businesses to adapt the model for specific tasks such as customer service, content creation, and data analysis, resulting in more accurate and task-specific outputs that align with the company’s objectives.

2. How does fine-tuning GPT-4o mini benefit my business?

Fine-tuning customizes GPT-4o mini to your specific needs, whether it’s generating content, handling customer queries, or automating repetitive tasks. This leads to improved accuracy, a better user experience, and more consistent outputs aligned with your brand voice and operational requirements.

3. What kind of data do I need to fine-tune GPT-4o mini?

You need a dataset with real-world examples of your business use case to help the model generate specific, relevant outputs. This dataset includes input-output pairs related to your specific task, such as customer inquiries and responses, product descriptions, or technical documentation.

4. What are the common challenges with using standard models, and how can fine-tuning help?

Standard models may produce outputs with inconsistent tone, incomplete information, or formatting errors. Fine-tuning GPT-4o mini on your specific dataset helps address these issues, ensuring the model generates content that is aligned with your business’s style and needs.

5. How long does it take to fine-tune GPT-4o mini?

The time it takes to fine-tune GPT-4o mini depends on factors such as the size of the dataset, the complexity of the task, and the training parameters. While small tasks may take a few minutes, more complex fine-tuning efforts can take hours.

6. What are the key parameters to consider when fine-tuning GPT-4o mini?

Key parameters include:

- Epochs: The number of times the model goes through the entire dataset during training.

- Batch Size: How many examples are processed at once.

- Learning Rate Multiplier: A factor that adjusts how quickly the model updates its parameters.

Starting with default settings is recommended, and adjustments can be made based on performance.

7. How can I test the performance of my fine-tuned model?

After fine-tuning, you can test the model by running it on real-world data and evaluating its performance based on key metrics, such as tone consistency, accuracy, and completeness of information. Adjust settings like temperature and frequency penalty to refine the output further.

8. Can fine-tuning GPT-4o mini be applied to industries beyond e-commerce?

Yes, fine-tuning GPT-4o mini can be applied across various industries, including healthcare, finance, legal services, and more. By training the model on domain-specific datasets, businesses can leverage GPT-4o mini for customized applications like report generation, customer support, and data analysis.

9. What tools or platforms can help streamline the fine-tuning process?

Platforms like FinetuneDB simplify the fine-tuning process by offering intuitive tools for dataset creation, model training, and deployment. These platforms often include features like real-time monitoring and collaboration tools, making it easier to manage and refine your fine-tuned models.

10. How can I deploy a fine-tuned GPT-4o mini model?

After fine-tuning, deploying GPT-4o mini is straightforward. You can use the OpenAI platform or the OpenAI API to integrate the fine-tuned model into your business systems, whether for customer service, automation, or content generation. The deployment process is typically as simple as selecting the fine-tuned model and using it in real-time applications.

11. Is fine-tuning GPT-4o mini cost-effective for businesses?

Yes, fine-tuning GPT-4o mini is typically more cost-effective than training a model from scratch. It allows businesses to leverage a pre-trained model and adapt it for specific tasks, reducing both time and resource investment while improving the model’s relevance to your business use case.