Qlerify Improves LLM Accuracy with Fine-tuning Using FinetuneDB

Qlerify background

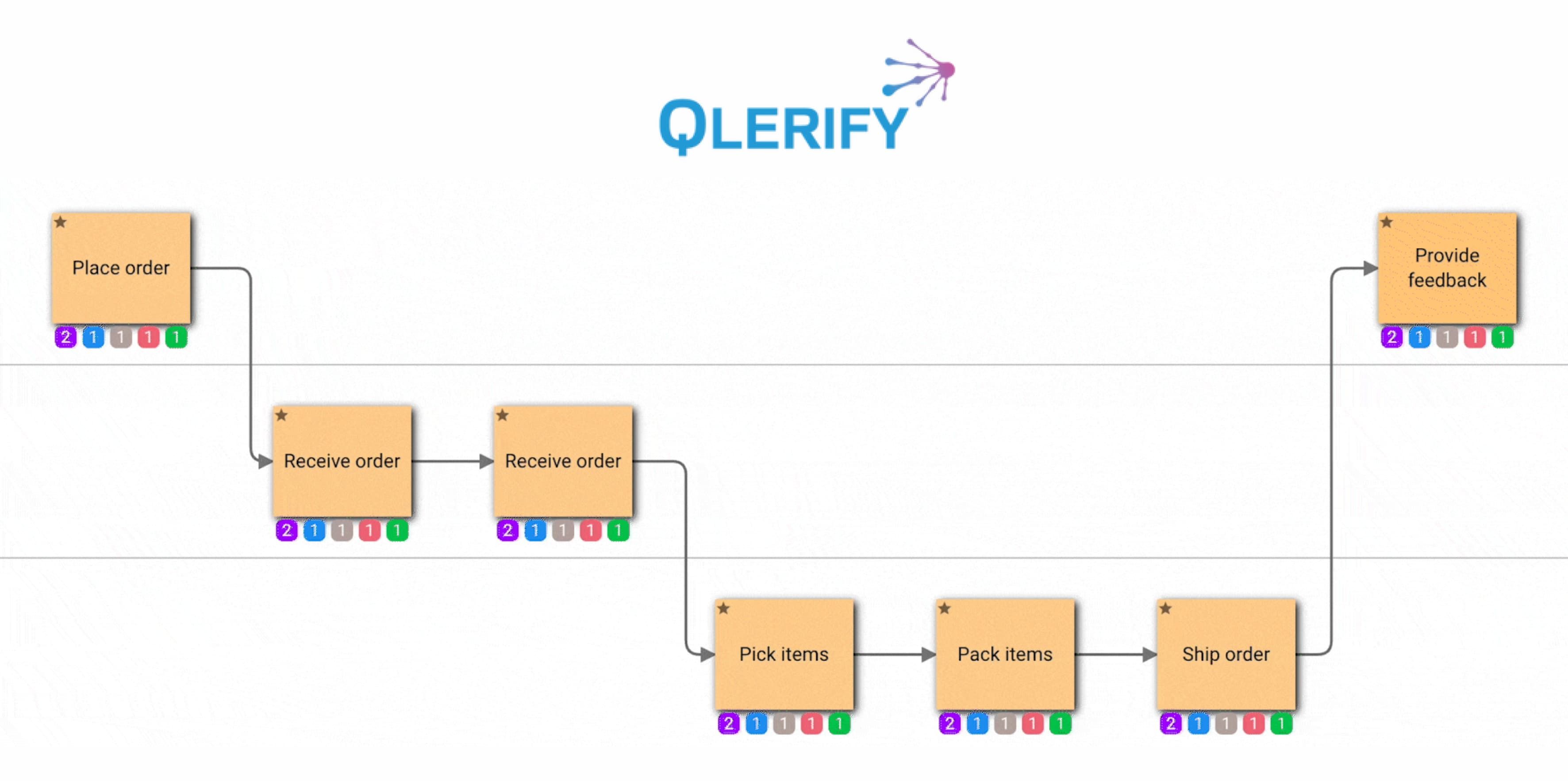

Qlerify is an AI-powered software design tool that helps digital transformation teams accelerate the digitization of enterprise business processes. By fine-tuning its model on the FinetuneDB platform, the team significantly improved output quality and speed while cutting costs.

Qlerify business processes workflows

PROBLEM

Qlerify’s AI output was inconsistent over time, and the quality for some business processes was not good enough

Qlerify combines a collaborative workspace with AI auto-generation of content, process modeling, data modeling, requirements gathering, and backlog management.

There were key challenges for the AI-generated content workflow:

- The model output for certain prompts was not stable and consistent over time.

- The model output quality for several business processes was insufficient. The model needed to understand and replicate the complex requirements of sectors as diverse as technology, healthcare, and finance.

- AI auto-generation of content was sometimes very slow.

The team had already gathered all the industry-specific data, but needed an easy way to transform it into fine-tuning datasets. At the same time, they were looking for a workflow to evaluate the LLM outputs and test different fine-tuned versions against each other.
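To make the dataset step concrete, here is a minimal sketch of what turning gathered industry records into a fine-tuning dataset can look like. It assumes the chat-style JSONL layout that OpenAI fine-tuning accepts (one JSON object per line with a `messages` list). The system prompt, the record fields (`industry`, `request`, `workflow`), and the sample records are hypothetical illustrations, not Qlerify’s actual data or FinetuneDB’s internals.

```python
import json

# Hypothetical system prompt; the prompt Qlerify actually uses is not given in the source.
SYSTEM_PROMPT = "You generate business process workflows as JSON."


def to_training_example(industry, request, workflow):
    """Build one chat-format training example from a domain expert's record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Industry: {industry}\n{request}"},
            # The target answer is itself JSON, serialized into the message string.
            {"role": "assistant", "content": json.dumps(workflow)},
        ]
    }


def to_jsonl(records):
    """Serialize records to JSONL: one training example per line."""
    return "\n".join(json.dumps(to_training_example(**r)) for r in records)


# Made-up sample records, for illustration only.
records = [
    {
        "industry": "healthcare",
        "request": "Model the patient intake process.",
        "workflow": {"steps": ["Register patient", "Verify insurance"]},
    },
    {
        "industry": "finance",
        "request": "Model the loan approval process.",
        "workflow": {"steps": ["Receive application", "Run credit check"]},
    },
]

jsonl_text = to_jsonl(records)
```

Writing `jsonl_text` to a `.jsonl` file would give a dataset in the expected shape. A no-code tool hides this step, but the resulting structure is broadly the same.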

Nikolaus Varzakakos

Chief Operating Officer at Qlerify

“The goal for our AI model is to produce accurate, industry-tailored outputs in JSON format, to integrate the outputs into our visual workflow tool.”

SOLUTION

Creating Custom Datasets with FinetuneDB’s Dataset Manager and Leveraging Evaluation Workflows

Using the FinetuneDB platform, Qlerify was able to easily fine-tune their LLM, improving output quality and speed while reducing costs.

- With FinetuneDB’s no-code environment, the Qlerify team was able to rely on a non-technical domain expert to collect the industry-specific data and transform it into datasets ready for fine-tuning.

- Qlerify’s AI model is powered by OpenAI, and FinetuneDB’s deep OpenAI integration simplifies both fine-tuning and model management.

- Once fine-tuning was completed, the domain expert was able to leverage FinetuneDB’s evaluation workflow to ensure that outputs were correct.

- Finally, in the prompt studio environment, they were able to test the base model against the fine-tuned model to conclude the testing.
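The evaluation and comparison steps above can be pictured with a small sketch. Since the stated goal is JSON output that plugs into a visual workflow tool, one simple check is the share of outputs that parse as JSON and contain the expected keys. The required key (`steps`) and the sample output strings are hypothetical placeholders, not real model results, and this is not how FinetuneDB’s evaluation workflow is implemented.

```python
import json

# Hypothetical schema: assume a usable output is a JSON object with a "steps" key.
REQUIRED_KEYS = {"steps"}


def is_valid_output(raw):
    """Return True if raw parses as a JSON object containing the required keys."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and REQUIRED_KEYS <= parsed.keys()


def valid_rate(outputs):
    """Fraction of outputs that pass the structural check."""
    if not outputs:
        return 0.0
    return sum(is_valid_output(o) for o in outputs) / len(outputs)


# Placeholder strings standing in for responses to the same prompts.
base_outputs = [
    '{"steps": ["Receive order"]}',
    "Sure! Here is the workflow you asked for.",
]
tuned_outputs = [
    '{"steps": ["Receive order"]}',
    '{"steps": ["Ship order"]}',
]

base_rate = valid_rate(base_outputs)
tuned_rate = valid_rate(tuned_outputs)
```

A structural check like this only catches malformed outputs. Whether the content is right for a given industry still needs a domain expert’s review, which is the role the evaluation workflow plays here.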

RESULT

FinetuneDB enabled Qlerify to create a fine-tuned AI model that is 3x cheaper, and up to 10x faster, with better performance.

The collaboration with FinetuneDB led to significant improvements in the quality and stability of Qlerify’s LLM outputs. Qlerify initially used GPT-4 with volatile results. They now have their custom fine-tuned GPT-3.5 “Qlerify” model in production, which is up to 10x faster and 3x cheaper, with even better performance.

Nikolaus Varzakakos

Chief Operating Officer at Qlerify

“FinetuneDB simplified the fine-tuning process significantly. We look forward to developing our AI model further with their intuitive frameworks.”

Qlerify factsheet