Fine-tuning vs. RAG: Understanding the Difference

Learn the key differences between fine-tuning and RAG (Retrieval-Augmented Generation), along with best practices for choosing the right strategy to optimize your AI model's performance.

DATE

Tue Aug 20 2024

AUTHOR

Felix Wunderlich

CATEGORY

Guide

What is the Difference Between Fine-Tuning and RAG?

Fine-tuning improves a pre-trained model by further training it on a specific, task-focused dataset, adjusting the model’s internal parameters (weights) so it handles specialized tasks better. RAG (Retrieval-Augmented Generation) enhances a model’s responses by retrieving relevant information from external databases or knowledge sources at query time and supplying it to the model as context.

Fine-tuning is best for tasks that require consistent performance within a specific domain, while RAG is ideal for providing the most up-to-date, relevant information. These approaches often work together, combining the strengths of both methods to boost AI performance.

Key Takeaways

- Understand the Core Differences: Learn what distinguishes fine-tuning from RAG in terms of function and application.

- When to Use Fine-tuning: Explore scenarios where fine-tuning is the preferred strategy.

- When to Use RAG: Discover use cases where RAG can significantly improve AI performance.

- Best Practices: Get actionable advice on implementing these strategies effectively.

- Choosing the Right Approach: Determine which method aligns with your goals.

Fine-tuning vs. RAG

What is Fine-tuning?

Fine-tuning involves taking a pre-trained model and training it further on a smaller dataset so that it is better suited to a specific task or domain. LoRA (Low-Rank Adaptation), a popular fine-tuning method, makes this process more efficient and cost-effective. Instead of updating all of the model’s weights, LoRA keeps them frozen and trains a small number of additional low-rank matrices whose product is added to selected weight matrices. This means you can achieve strong results without massive computational resources, and with this approach fine-tuning can often be effective with a targeted dataset of as few as 10-100 examples. It’s a good choice for specialized tasks like writing product descriptions in a brand’s unique voice or extracting specific data where precision is key.
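To make the efficiency argument concrete, here is a minimal pure-Python sketch of the LoRA idea for a single weight matrix. It assumes the standard LoRA formulation, output = W x + (alpha / r) · B (A x), with the pre-trained matrix W frozen and only A and B trained. The function names are illustrative, not part of any library; real implementations (for example, Hugging Face PEFT) apply this inside a deep-learning framework.

```python
# Illustrative LoRA sketch for one weight matrix (no ML framework).
# Assumption: standard LoRA form W x + (alpha / r) * B (A x), W frozen.

def lora_trainable_params(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one d_out x d_in matrix."""
    full = d_out * d_in           # full fine-tuning updates every entry of W
    lora = rank * (d_out + d_in)  # B is d_out x rank, A is rank x d_in
    return full, lora


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha: float) -> list[float]:
    """Compute W x + (alpha / r) * B (A x), where r is the rank (rows of A)."""
    r = len(A)
    base = matvec(W, x)              # frozen pre-trained weights
    delta = matvec(B, matvec(A, x))  # trainable low-rank update
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


full, lora = lora_trainable_params(4096, 4096, 8)
print(f"full: {full}, LoRA: {lora}, ratio: {lora / full:.2%}")
```

For a 4096 x 4096 matrix with rank 8, LoRA trains 65,536 parameters instead of 16,777,216, about 0.4% as many. In the original LoRA formulation B starts at zero, so the adapted model initially behaves exactly like the pre-trained one.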

Best Practices for Fine-tuning

- Start with a Clear Objective: Define the specific task or domain you want your model to excel in. This clarity will guide the dataset creation and fine-tuning process.

- Curate High-Quality Data: Use a well-curated dataset that reflects the specific scenarios your model will encounter. Quality over quantity is key here.

- Monitor for Overfitting: Fine-tuning can lead to overfitting, where the model performs well on the training data but poorly on new data. Regularly evaluate the model on a held-out validation set it was not trained on so you can catch this early.

- Iterate and Improve: Fine-tuning is an iterative process. Continuously refine the model by updating the dataset and re-tuning as needed.
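One common way to act on the overfitting advice is to hold out part of the dataset, track validation loss after each training epoch, and keep the checkpoint where it was lowest (early stopping). The sketch below assumes your training tool already reports a validation loss per epoch; the helper names, the 20% split, and the patience value are illustrative choices, and the loss values in the usage lines are made up.

```python
import random


def train_val_split(examples: list, val_fraction: float = 0.2, seed: int = 0):
    """Shuffle a copy of the examples and hold out a fraction for validation."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]  # (train, validation)


def best_epoch_with_early_stopping(val_losses: list[float], patience: int = 2) -> int:
    """Return the 0-based epoch with the lowest validation loss, stopping once
    the loss has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, bad_epochs = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, bad_epochs = epoch, loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # validation loss keeps rising: a typical overfitting signal
    return best_epoch


train, val = train_val_split([f"example {i}" for i in range(10)])
hypothetical_losses = [0.92, 0.71, 0.65, 0.68, 0.74, 0.80]
print(len(train), len(val), best_epoch_with_early_stopping(hypothetical_losses))
```

Here training loss could still be falling after epoch 2, but validation loss rises twice in a row, so the checkpoint from epoch 2 is the one to keep.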

What is RAG?

Retrieval-Augmented Generation (RAG) combines a generative model with a retrieval step. Instead of relying solely on the model’s internal knowledge, RAG lets the model query external databases or documents in real time and adds the most relevant passages to its input before it generates a response. This approach is particularly useful when responses need to be factually accurate and up to date.
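The retrieve-then-generate flow can be sketched in a few lines. This toy version assumes a small in-memory document list and uses bag-of-words cosine similarity as a stand-in for the embedding model and vector database a production system would use. The function names and documents are hypothetical, and the final prompt would be sent to whichever model you use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Augment the user's question with the retrieved passages."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "The X100 headphones cost $199 and ship in 2 days.",
    "Our return policy allows returns within 30 days.",
    "The X200 speaker has 20 hours of battery life.",
]
print(build_prompt("What is the return policy?", docs, k=1))
```

Because the knowledge lives in `docs` rather than in the model’s weights, updating the answer only requires changing the documents, not retraining.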

Best Practices for RAG

- Leverage Rich, Up-to-Date Sources: Make sure the external knowledge base is comprehensive and regularly updated, so the model retrieves accurate and relevant information.

- Optimize Retrieval Strategies: Tune the retrieval component (for example, how documents are split into chunks and how many passages are retrieved per query) so it efficiently finds the most relevant data.

- Integrate with Domain-Specific Data: Limit the retrieval sources to the specific domain or task to improve the relevance of the generated outputs.

- Monitor and Adjust: Regularly evaluate the performance of the RAG system and adjust the retrieval sources as needed.
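To make optimizing and monitoring retrieval measurable, one option is to keep a small labeled set of test queries, each paired with the documents that should be retrieved for it, and track recall@k whenever you change retrieval settings or sources. A minimal sketch; the query and document IDs are hypothetical.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant document IDs found in the top-k retrieved IDs."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def mean_recall_at_k(results: dict[str, list[str]], labels: dict[str, set[str]], k: int) -> float:
    """Average recall@k over a labeled set of test queries."""
    scores = [recall_at_k(results[q], labels[q], k) for q in labels]
    return sum(scores) / len(scores)


# Hypothetical labels: which documents should be retrieved for each test query.
labels = {"q1": {"doc_returns"}, "q2": {"doc_x100", "doc_shipping"}}
# Hypothetical ranked output of the retriever for the same queries.
results = {"q1": ["doc_returns", "doc_x200"], "q2": ["doc_x100", "doc_x200", "doc_shipping"]}
print(mean_recall_at_k(results, labels, k=2), mean_recall_at_k(results, labels, k=3))
```

A drop in this score after changing chunking, ranking, or data sources is an early warning that the model will receive less relevant context.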

Fine-tuning vs. RAG: Which Should You Use?

The choice between fine-tuning and RAG depends on your specific needs and the nature of the tasks your model will perform.

When to Choose Fine-tuning

- Consistency is Key: If your task requires consistent performance in a specialized area, fine-tuning is typically the better option.

- Domain-Specific Knowledge: Fine-tuning is ideal for scenarios where the model needs to deeply understand and generate responses within a specific domain.

- Limited External Data: When reliable external data sources are not available or necessary, fine-tuning allows you to maximize the model’s existing capabilities.

When to Choose RAG

- Dynamic and Up-to-Date Information: RAG is best for tasks that require access to the latest information, such as real-time data or constantly evolving content.

- Broad Knowledge Base: If your model needs to pull from a wide array of information, RAG’s ability to retrieve relevant data from extensive sources is invaluable.

- Fact-Checking and Accuracy: When factual accuracy is critical, RAG can help ensure the model’s responses are based on the most relevant and up-to-date information by cross-referencing with an external data source.

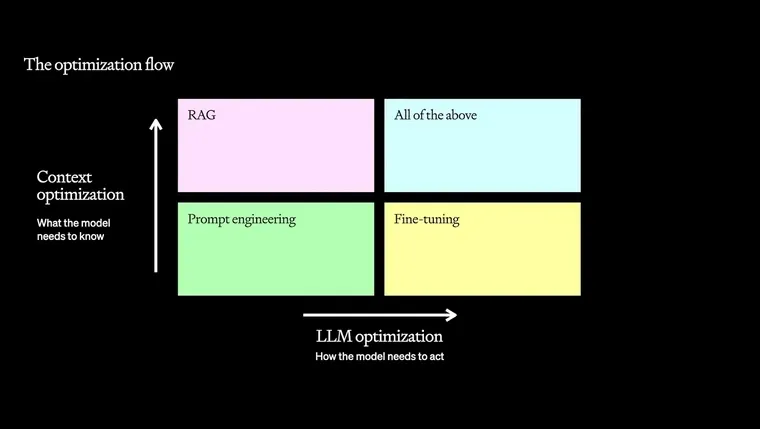

Best Practices for Optimization

Optimizing AI models typically begins with prompt engineering to refine the model’s responses. Depending on your specific use case, you may then incorporate fine-tuning, RAG, or a combination of both.

- Start with Prompt Engineering: Before considering other methods, start with prompting, using techniques such as in-context learning (including examples in the prompt), to establish a baseline for your use case.

- Fine-tune for Specialized Tasks: If prompt engineering alone doesn’t produce the specialized output you need, move on to fine-tuning.

- Leverage RAG for Context: If the model needs access to company-specific information or data not included in its base training, integrate RAG.

- Combining Fine-tuning and RAG: Fine-tuning customizes the model to produce task-specific outputs, while RAG provides access to real-time, specialized data. For example, RAG can fetch the latest information from a company’s product catalog during customer interactions, while the fine-tuned model ensures that this data is presented in responses aligned with the company’s standards.
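The product-catalog example can be sketched as a message-building step: a retrieval function pulls the relevant catalog entries into the prompt, and the resulting messages would then be sent to your fine-tuned model, which supplies the tone and format. Everything here is a hypothetical illustration: the catalog contents, the product codes, and the exact-match lookup (a stand-in for a real search index or vector database). The messages use the common role/content chat format.

```python
# Hypothetical product catalog; in practice this would live in a search index or database.
CATALOG = {
    "X100": "X100 wireless headphones, $199, 30-hour battery, in stock.",
    "X200": "X200 portable speaker, $149, 20-hour battery, ships in 5 days.",
}


def retrieve_catalog_entries(question: str) -> list[str]:
    """Toy retrieval step: return entries whose product code appears in the question."""
    q = question.upper()
    return [entry for code, entry in CATALOG.items() if code in q]


def build_messages(question: str) -> list[dict]:
    """RAG supplies the facts in the prompt; the fine-tuned model that receives
    these messages is responsible for brand voice and response format."""
    facts = retrieve_catalog_entries(question)
    context = "\n".join(facts) if facts else "No matching products found."
    return [
        {"role": "system", "content": "Answer customer questions using the catalog data provided."},
        {"role": "user", "content": f"Catalog data:\n{context}\n\nQuestion: {question}"},
    ]


for message in build_messages("How long does the x100 battery last?"):
    print(message["role"], "->", message["content"])
```

The design keeps the two concerns separate: catalog updates only touch the retrieval data, while changes to tone or format are handled by re-tuning the model.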

Choosing the Right Approach

Both fine-tuning and RAG offer powerful ways to optimize AI models, but choosing the right strategy depends on your specific needs. Fine-tuning excels in creating models that perform consistently in specialized tasks, while RAG is invaluable for tasks requiring dynamic, up-to-date information retrieval. In some cases, combining both strategies can deliver the best results.

If you want to learn more about fine-tuning your AI model, check out FinetuneDB or reach out to us to evaluate your use case.

Frequently Asked Questions

Is fine-tuning better than RAG?

Fine-tuning and RAG serve different purposes and are not directly comparable. Fine-tuning is ideal for tasks requiring consistent, high-quality performance within a specialized domain, where the model needs to understand specific nuances and terminology. RAG, on the other hand, is used when the model needs dynamic access to up-to-date or external information, making it suitable for scenarios where real-time data retrieval is essential. The best choice depends on your specific use case; sometimes, a combination of both may be the most effective approach.

What is the difference between fine-tuning, RAG, and prompt engineering?

Fine-tuning involves training a model on a specific, task-oriented dataset to enhance its performance in specialized areas. RAG augments the model by enabling it to retrieve and integrate external information in real-time, providing context and relevance that the base model may not possess. Prompt engineering focuses on crafting the input queries to optimize the model’s output without altering the model’s internal parameters or data sources. Each technique has its own use case and can be used individually or in combination depending on the project’s needs.

Can fine-tuning and RAG be used together?

Yes, fine-tuning and RAG can be combined to create a more robust AI system. Fine-tuning customizes the model to excel in specific tasks, while RAG provides access to real-time data or external information during interactions. This combination enhances the model’s ability to deliver accurate, context-aware responses, leveraging both specialized knowledge and up-to-date data.

When should I use fine-tuning over RAG?

Fine-tuning should be used when your application requires the model to consistently perform well in a specific domain, particularly where understanding and generating responses with deep, domain-specific knowledge is critical. Examples include customer support systems tailored to specific industries, legal document generation, or scenarios requiring adherence to a specialized tone or style. Fine-tuning helps make the model highly specialized and reliable for these tasks.

How does RAG improve the accuracy of AI models?

RAG improves the accuracy of AI models by allowing them to access and incorporate external data sources during response generation. Grounding the model’s outputs in retrieved information makes responses more accurate and contextually appropriate, provided the sources themselves are relevant and current. RAG is particularly useful in applications that require real-time data or detailed factual information that the base model might not inherently possess.

What are the main challenges in implementing RAG?

Implementing RAG involves setting up an efficient data retrieval system, ensuring that the external data sources are reliable and current, and integrating these sources with the language model in a way that enhances performance without introducing latency or inaccuracies. Maintaining data security and ensuring compliance with regulations when using external information are also significant challenges.

Is RAG more scalable than fine-tuning?

RAG can be more scalable in certain contexts because it doesn’t require retraining the model for every new task or dataset. Instead, RAG dynamically retrieves relevant information at runtime, which can be more efficient for large-scale applications. However, scalability depends on the specific requirements and infrastructure of your project.

How do I decide between fine-tuning and RAG for my AI project?

The decision between fine-tuning and RAG depends on the specific needs of your AI project. Fine-tuning is the better choice for tasks that require a deep understanding and consistent performance in a specialized area. RAG is more suitable for handling diverse queries that need real-time data integration. In some cases, combining both approaches can provide the best results, with fine-tuning ensuring specialized performance and RAG offering access to the latest information.

What tools can help me implement fine-tuning and RAG?

For fine-tuning, platforms like FinetuneDB provide comprehensive solutions for managing datasets, training models, and deploying them effectively. For RAG, a vector database such as Pinecone can provide efficient retrieval over your data. Each tool serves a specific function, and selecting the right combination depends on your project’s requirements.