A Guide to Large Language Model Operations (LLMOps)

What is LLMOps, why is it important for businesses to have a clear LLM strategy, and what are the current best practices?

DATE

Wed Mar 27 2024

AUTHOR

Kolumbus Lindh

CATEGORY

Guide

What is LLMOps?

Initially, LLMs were grouped under the broader umbrella of MLOps. However, it quickly became evident that the unique challenges and requirements of LLMs needed a specialized approach. Today, over half of the leading CEOs consider AI, particularly generative AI, to be the most impactful trend in their industries. Yet many businesses still lack the understanding and strategy needed to implement AI technologies effectively.

Enter LLMOps: The discipline dedicated to maximizing the value of Large Language Models (LLMs) through effective training, implementation, and evaluation.

Key Takeaways

- LLMOps is a subdivision of MLOps

- Core components of LLMOps

- The future landscape of LLMOps

- Frequently asked questions

LLMOps vs. MLOps - Clarifying the Difference

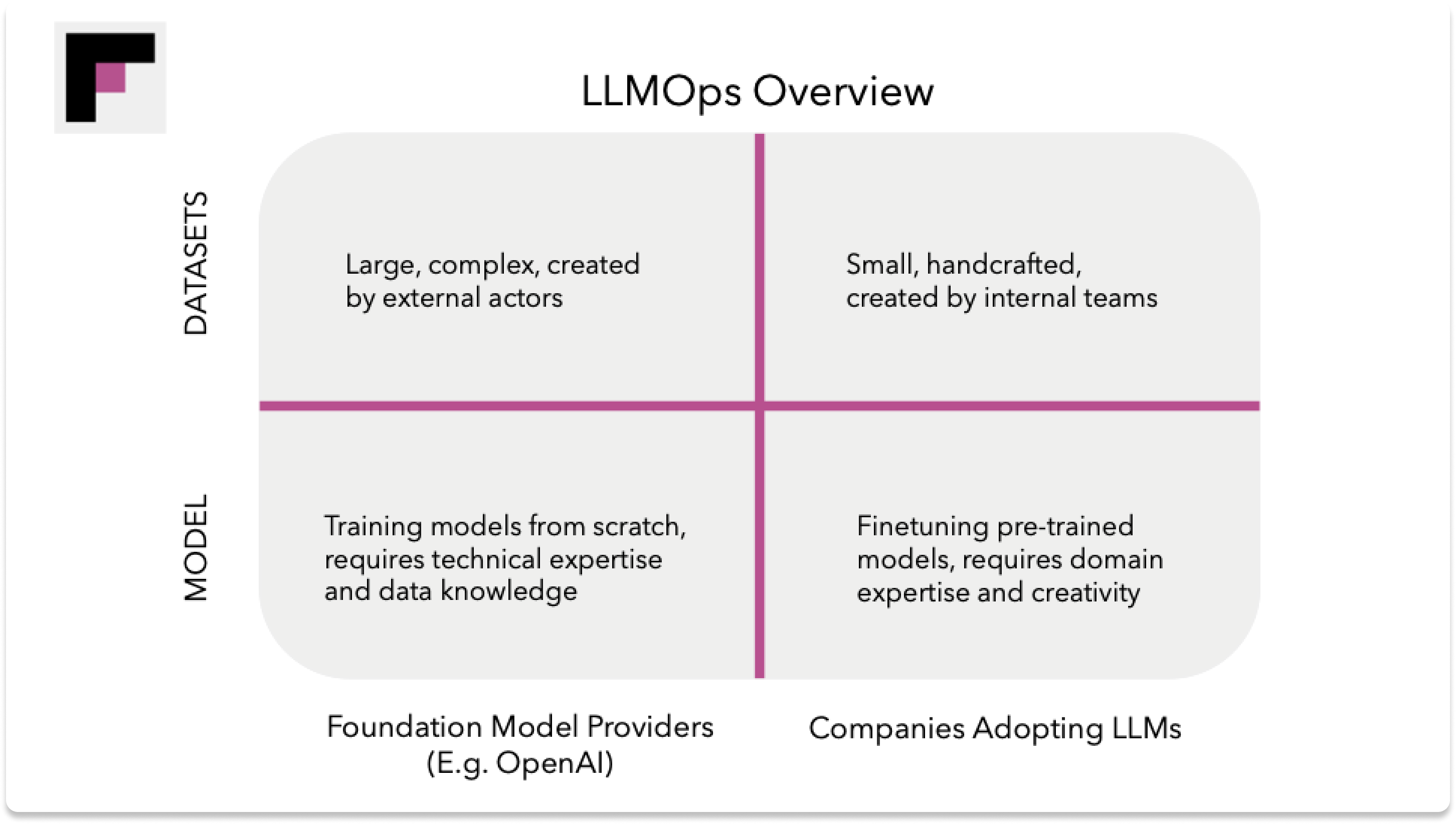

Data Management and Model Development

- MLOps focuses on extensive data preparation, involving cleaning, preprocessing, and labeling across varied datasets, and often involves developing new models from scratch, which demands a robust technical skill set.

- LLMOps primarily works with pre-trained models, since training new LLMs from scratch is resource-intensive. These models are refined for specific industry needs through fine-tuning and prompt engineering, which blends technical skill with creativity.

Cost Differences

- MLOps incurs significant expenses during the model development phase, including data preprocessing and the hiring of ML experts, emphasizing a more technical investment.

- LLMOps incurs most of its costs during model usage, or inference. Because adapting a pre-trained model is comparatively quick and accessible, the emphasis shifts to optimizing inference for cost-efficiency.

Core Components of LLMOps

LLMOps is a framework for developing, deploying, and maintaining high-performing language models. This process involves several key stages, each with its own set of considerations and best practices. The specific requirements and approaches may vary depending on the type of LLM being used, such as proprietary models like ChatGPT or open-source models hosted in-house or in the cloud.

LLMOps overview by FinetuneDB

Development and Adaptation in LLMOps

The development and adaptation phase in LLMOps focuses on selecting and tailoring the appropriate foundation models for specific tasks. This stage involves crucial decisions between proprietary and open-source models, considering factors such as performance, cost, flexibility, and alignment with business objectives.

Model Selection

- Proprietary Models: Proprietary models, like ChatGPT, offer state-of-the-art performance and ease of use through API access. However, they come with higher costs and less flexibility compared to open-source alternatives.

- Open-Source Models: Open-source models provide a cost-effective and flexible option, allowing for in-house hosting on the cloud or on-premises. However, they may require more effort to achieve performance levels comparable to proprietary models.

Model Adaptation Techniques

- Prompt Engineering: Crafting effective prompts is crucial for guiding the model to generate desired outputs. This involves leveraging the model’s pre-existing knowledge and iteratively testing prompt phrasing to maximize response quality.

- Fine-tuning: Fine-tuning involves adapting a pre-trained model to a specific domain or task by further training it on a smaller, task-specific dataset. This allows for personalized model behavior, improving performance on niche tasks and aligning the model with core business principles.

- Leveraging External Data: Incorporating external data sources, such as databases, knowledge bases, or real-time data streams, can significantly enhance an LLM’s performance, particularly in domains where the model lacks up-to-date knowledge.
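To make the external data technique more concrete, here is a minimal Python sketch of how retrieved snippets might be placed into a prompt. Everything in it is an assumption for illustration: `Document`, `retrieve`, and `build_prompt` are hypothetical names, and the word-overlap ranking is only a stand-in for whatever search or database lookup a real system would use.

```python
from dataclasses import dataclass


@dataclass
class Document:
    """A snippet from a hypothetical external knowledge base."""
    title: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return set(cleaned.split())


def retrieve(question: str, docs: list[Document], top_k: int = 2) -> list[Document]:
    """Rank documents by word overlap with the question (a stand-in for real search)."""
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(d.text)), d) for d in docs]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the question.
    return [d for score, d in ranked[:top_k] if score > 0]


def build_prompt(question: str, context: list[Document]) -> str:
    """Assemble a prompt that tells the model to answer from the supplied context only."""
    if context:
        context_block = "\n".join(f"- {d.title}: {d.text}" for d in context)
    else:
        context_block = "(no relevant documents found)"
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )
```

The resulting string would then be sent to whichever model you have selected. Iterating on the instruction wording at the top of `build_prompt` is where prompt engineering comes in.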

Quality Assurance with LLMOps

Before deploying an LLM, it is essential to ensure that the model meets quality standards. Evaluating the quality of an LLM is often subjective and depends on the specific use case. However, some general guidelines can be followed:

- Version Control: Compare the performance of the newly trained model against its predecessors by providing the same prompts and analyzing the responses. Assess whether the new version responds in a way that better reflects your business objectives and principles.

- Human Evaluation: Consider how you or your team would respond to the given prompts and use that as a benchmark for evaluating the AI’s performance. The AI acts as the face of your business when interacting with customers. Therefore, it’s crucial that it performs tasks in line with your anticipated standards.

If the model’s responses do not meet desired quality standards, iterate on the training process by refining prompts, fine-tuning, or incorporating additional data until satisfactory results are achieved. To learn more, check out this guide on QA and evaluation: How to Evaluate the Quality of Fine-Tuned Models
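The version comparison and human benchmark described above can be sketched as a small Python harness. This is a rough illustration under stated assumptions: the two models are represented as plain functions (hypothetical stand-ins for real model calls), the reference answers are ones your team has written, and the text similarity score from the standard library's `difflib` is only a crude proxy for human judgment.

```python
from difflib import SequenceMatcher
from typing import Callable

# A model is represented as any function that maps a prompt to a response.
Model = Callable[[str], str]


def similarity(a: str, b: str) -> float:
    """Return a 0..1 character-level similarity score between two strings."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def compare_versions(
    references: dict[str, str], old_model: Model, new_model: Model
) -> list[dict]:
    """Send the same prompts to both versions and score each against the team's reference answer."""
    rows = []
    for prompt, reference in references.items():
        old_score = similarity(old_model(prompt), reference)
        new_score = similarity(new_model(prompt), reference)
        rows.append(
            {
                "prompt": prompt,
                "old": old_score,
                "new": new_score,
                "improved": new_score > old_score,
            }
        )
    return rows
```

Rows where `improved` is false are good candidates for manual review, since an automatic score cannot tell whether a differently worded answer still reflects your business principles.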

Model Deployment in LLMOps

Deploying LLMs involves considerations such as inference costs, model compression, and the choice between cloud-based and on-premises hosting.

Deployment Strategies

- Inference Cost Optimization: Inference costs can be significant, especially for models deployed at scale or in real-time applications. For proprietary models, consider the tradeoff between complexity, cost, and performance when opting for more capable but more expensive models such as GPT-4 instead of GPT-3.5. With fine-tuning, older models can often achieve results similar to newer ones while operating significantly faster and at lower cost.

If you opt for open-source models, the solutions are more technical and often include model compression and distillation.
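To reason about the cost tradeoff, a back-of-the-envelope estimate is often enough. The Python sketch below assumes per-token pricing split into input and output rates. The `Pricing` class, the `monthly_cost` function, and the example prices are all hypothetical and are not real vendor prices, so substitute the current rates from your provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price per 1,000 tokens, in the currency of your choice (hypothetical values)."""
    input_per_1k: float
    output_per_1k: float


def monthly_cost(
    pricing: Pricing,
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    days: int = 30,
) -> float:
    """Estimate monthly spend from average token counts per request."""
    per_request = (
        (input_tokens / 1000) * pricing.input_per_1k
        + (output_tokens / 1000) * pricing.output_per_1k
    )
    return per_request * requests_per_day * days


# Made-up prices for a larger and a smaller model, for illustration only.
larger_model = Pricing(input_per_1k=0.01, output_per_1k=0.03)
smaller_model = Pricing(input_per_1k=0.001, output_per_1k=0.002)

for name, pricing in [("larger", larger_model), ("smaller", smaller_model)]:
    cost = monthly_cost(pricing, requests_per_day=1000, input_tokens=500, output_tokens=200)
    print(f"{name} model: {cost:.2f} per month")
```

Running the same traffic profile through both price lists shows how quickly the gap grows with volume, which is the case for testing whether a fine-tuned smaller model is good enough.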

Hosting Options

- Cloud-based Hosting: Cloud platforms offer scalable and flexible hosting options for LLMs. Proprietary models like ChatGPT are accessed through an API key, so you do not need to host anything yourself because OpenAI takes care of that, while open-source models can be hosted on cloud instances.

- On-premises Hosting: For organizations with strict data privacy or security requirements, hosting LLMs on-premises may be preferred. This approach requires more infrastructure setup and maintenance but provides greater control over the model and data.

Monitoring and Refinement with LLMOps

Continuously monitoring and refining LLMs post-deployment is crucial for maintaining their accuracy, relevance, and alignment with evolving requirements.

Monitoring

- Performance Tracking: Regularly monitor the model’s performance in production, tracking key metrics and identifying any degradation over time.

- Model Drift Detection: Monitor for changes in input data patterns or external contexts that may lead to decreased model effectiveness.

- User Feedback: Collect and analyze user feedback to identify areas for improvement and gather insights on real-world performance.

To learn more, check out this guide on monitoring: Monitoring LLM Production Data: The Key to Understanding Your Model’s Behavior
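As one way to picture performance tracking driven by user feedback, the Python sketch below keeps a rolling window of thumbs-up and thumbs-down ratings and flags a drop against a baseline. The `FeedbackMonitor` class, the window size, and the threshold are assumptions chosen for illustration. A production setup would typically track several metrics and log them to a dedicated monitoring tool.

```python
from collections import deque
from typing import Optional


class FeedbackMonitor:
    """Track the share of positive ratings over the most recent responses."""

    def __init__(self, baseline_rate: float, window: int = 100, max_drop: float = 0.10):
        self.baseline_rate = baseline_rate  # positive rate measured at release time
        self.max_drop = max_drop            # tolerated drop before flagging
        self.ratings = deque(maxlen=window) # oldest ratings fall out automatically

    def record(self, positive: bool) -> None:
        """Store one user rating (True for thumbs-up, False for thumbs-down)."""
        self.ratings.append(1 if positive else 0)

    def current_rate(self) -> Optional[float]:
        """Return the positive rate in the window, or None if there is no data yet."""
        if not self.ratings:
            return None
        return sum(self.ratings) / len(self.ratings)

    def degraded(self) -> bool:
        """Flag degradation only once the window is full, to avoid noisy early alerts."""
        rate = self.current_rate()
        if rate is None or len(self.ratings) < self.ratings.maxlen:
            return False
        return (self.baseline_rate - rate) > self.max_drop
```

A call to `degraded()` after each recorded rating could trigger a review of recent prompts, which is often the first sign of drift in the kinds of questions users are asking.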

Refinement

- Retraining and Updating: Regularly update the model by retraining on new data, fine-tuning, or adjusting prompts and external data sources based on monitoring insights and user feedback.

- Addressing Edge Cases: Identify and address edge cases or rare scenarios where the model may struggle, improving its robustness and performance in diverse situations.

- Continuous Improvement: Iterate on the model’s performance through a continuous cycle of monitoring, refinement, and redeployment to ensure ongoing enhancement and alignment with business objectives.
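One possible way to feed edge cases back into retraining is to turn human-corrected responses into new fine-tuning examples. The Python sketch below writes them in a chat-style JSONL layout (a `messages` list with `system`, `user`, and `assistant` roles). That layout is an assumption here, so check the exact format your fine-tuning provider expects. `ReviewedCase` and the helper functions are hypothetical names.

```python
import json
from dataclasses import dataclass


@dataclass
class ReviewedCase:
    """A production prompt whose response a human reviewer has checked."""
    prompt: str
    model_response: str
    corrected_response: str


def to_training_example(case: ReviewedCase, system_prompt: str) -> dict:
    """Build one chat-style training record that teaches the corrected answer."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": case.prompt},
            {"role": "assistant", "content": case.corrected_response},
        ]
    }


def to_jsonl(cases: list[ReviewedCase], system_prompt: str) -> str:
    """Serialize only the cases the reviewer changed, one JSON object per line."""
    changed = [
        c for c in cases
        if c.corrected_response.strip() != c.model_response.strip()
    ]
    return "\n".join(json.dumps(to_training_example(c, system_prompt)) for c in changed)
```

Cases where the reviewer left the response unchanged are skipped, so the new dataset concentrates on the situations where the model actually struggled.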

By following this structured approach to LLMOps, organizations can effectively develop, deploy, and maintain high-performing language models that deliver value and adapt to real-world challenges. The specific implementation details may vary depending on the chosen LLM model and deployment scenario, but the core principles of development, adaptation, quality assurance, deployment, monitoring, and refinement remain essential for successful LLMOps.

The Future Landscape of LLMOps

As AI continues to advance at a rapid pace, LLMOps is poised to evolve alongside it. Some key trends and predictions for the future of LLMOps include:

- Convergence with MLOps: While LLMOps has emerged as a distinct discipline, there is potential for convergence with traditional MLOps practices. As LLMs become more integrated with other AI systems, a unified approach to AI operations may become necessary.

- Democratization of LLMs: The increasing availability of open-source LLMs and the development of more user-friendly tools for fine-tuning and deployment will make LLMs more accessible to a broader range of businesses and developers.

- Emphasis on Responsible AI: As LLMs become more powerful and widely used, ensuring their responsible development and deployment will be a central focus of LLMOps. This will involve addressing issues of bias, transparency, and accountability.

- Integration with Other Technologies: LLMs will likely be increasingly integrated with other technologies, such as computer vision, speech recognition, and robotics, enabling more sophisticated and multi-modal AI applications.

FinetuneDB Simplifying LLMOps

By focusing on key aspects like development, deployment, and continuous improvement, companies can ensure their LLMs deliver value and align with business objectives. FinetuneDB simplifies the LLMOps process, enabling businesses to optimize their LLMs’ performance without deep technical expertise.

Frequently Asked Questions

What is LLMOps?

LLMOps, or Large Language Model Operations, focuses on maximizing the value of Large Language Models through effective training, implementation, and evaluation. It’s crucial for businesses leveraging AI to enhance operations and customer engagement.

How are businesses using LLMs?

Businesses use LLMs for chatbots, content generation, code assistance, and language translation to improve customer service and operational efficiency.

LLMOps vs MLOps: What’s the difference?

LLMOps specializes in large language models, emphasizing fine-tuning and prompt engineering, while MLOps deals with a broader range of machine learning operations, including data preparation and model training.

Key components of LLMOps?

Key components include development and adaptation of models, quality assurance, deployment strategies, and continuous monitoring and refinement post-deployment.

How to ensure LLM quality?

Ensure LLM quality through version control, human evaluation, and iterative refinement based on these assessments.

Future trends in LLMOps?

Trends include convergence with MLOps, democratization of LLMs, emphasis on responsible AI, and integration with other technologies.

Importance of deployment strategies in LLMOps?

Deployment strategies are vital for managing costs and performance and for deciding between cloud-based and on-premises hosting, all of which affect the model’s efficiency and alignment with business goals.

How does LLMOps drive business competitiveness?

LLMOps enables the effective use of LLMs, ensuring they are well-developed, deployed, and maintained, enhancing customer experiences and keeping businesses competitive in the AI era.