How to Fine-tune GPT-3.5 for Email Writing Style

Learn how to fine-tune GPT-3.5 to create a custom AI model that generates emails in your writing style.

DATE

Tue May 21 2024

AUTHOR

Felix Wunderlich

CATEGORY

Guide

Key Takeaways

This guide covers the process of creating a custom fine-tuned GPT-3.5 model for your email writing style using the FinetuneDB platform. Steps include dataset preparation, model training, testing, deployment, and ongoing evaluation.

- Dataset Preparation: Collect and structure high-quality outreach emails with consistent formatting. Start with at least 10 examples.

- Model Training: Upload your dataset to FinetuneDB and train with OpenAI. Duration and cost vary by dataset size.

- Testing: Evaluate the fine-tuned model on new scenarios and make adjustments where the outputs fall short.

- Deployment: Integrate the AI into your email workflow, maintaining your tone of voice and monitoring performance.

- Ongoing Evaluation: Regularly assess performance, gather feedback, and refine the model to improve its capabilities.

Step 1: Collect and Prepare Fine-tuning Datasets

To fine-tune GPT-3.5 effectively, start with a well-prepared dataset that mirrors your desired email communication style. In this case, we’re collecting high-performing sales outreach emails.

- Gather Examples: Collect high-quality examples of your best outreach emails.

- Structure Data: Each dataset entry should include:

  - System: The role the model takes on (stays the same across all entries).

  - Input: Company name and description.

  - Output: Email subject line and body text.

- Consistency: Maintain consistent formatting across all examples.

- Quality Over Quantity: Focus on quality; start with at least 10 well-crafted examples.

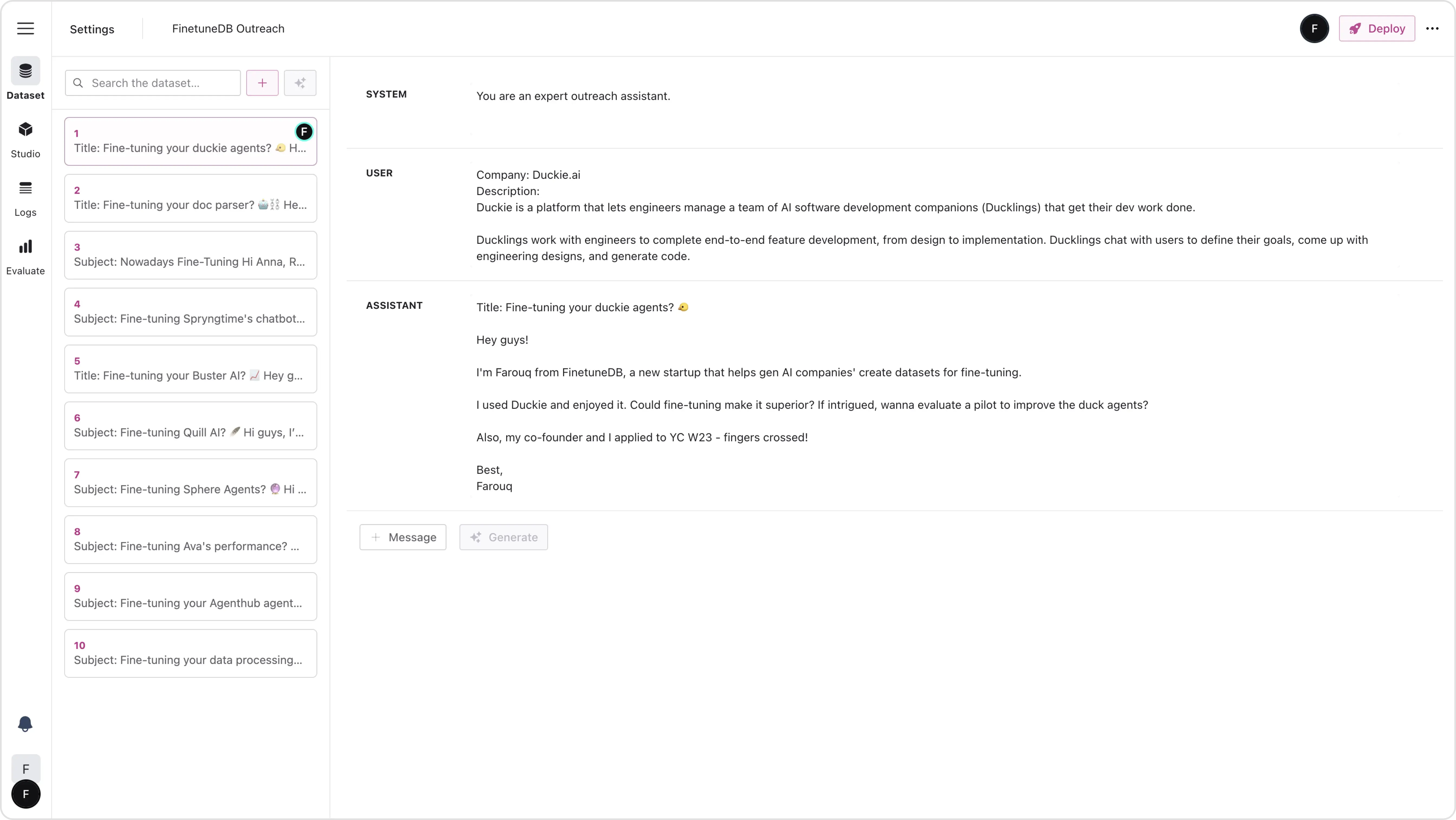

Below is an example dataset entry:

You are an expert outreach assistant.

Company: Duckie.ai

Description:

Duckie is a platform that lets engineers manage a team of AI software development companions (Ducklings) that get their dev work done. Ducklings work with engineers to complete end-to-end feature development, from design to implementation. Ducklings chat with users to define their goals, come up with engineering designs, and generate code.

Title: Fine-tuning your duckie agents? 🐤

Hey guys!

I’m Farouq from FinetuneDB, a new startup that helps gen AI companies create datasets for fine-tuning.

I used Duckie and enjoyed it. Could fine-tuning make it superior? If intrigued, wanna evaluate a pilot to improve the duck agents?

Best,

Farouq
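The entry above can also be written as a chat-format JSONL record, the layout OpenAI's fine-tuning endpoint accepts for chat models. This is a minimal sketch: `build_entry` is a hypothetical helper name, and the article does not say how FinetuneDB stores entries internally, so treat the mapping of System, Input, and Output onto the `system`, `user`, and `assistant` roles as an assumption.

```python
import json

# Same system prompt for every entry, as in the example above.
SYSTEM_PROMPT = "You are an expert outreach assistant."


def build_entry(company: str, description: str, title: str, body: str) -> dict:
    """Return one training example as system / user / assistant chat messages."""
    user_input = f"Company: {company}\n\nDescription:\n\n{description}"
    assistant_output = f"Title: {title}\n\n{body}"
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_input},
            {"role": "assistant", "content": assistant_output},
        ]
    }


entry = build_entry(
    company="Duckie.ai",
    # Description and body are shortened here for readability.
    description="Duckie is a platform that lets engineers manage a team of AI "
                "software development companions (Ducklings) that get their dev work done.",
    title="Fine-tuning your duckie agents? 🐤",
    body="Hey guys!\n\nI used Duckie and enjoyed it. Could fine-tuning make it superior?"
         "\n\nBest,\n\nFarouq",
)

# A JSONL file holds one such JSON object per line.
jsonl_line = json.dumps(entry, ensure_ascii=False)
```

Keeping the system prompt in a single constant is one way to guarantee it stays identical across entries.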

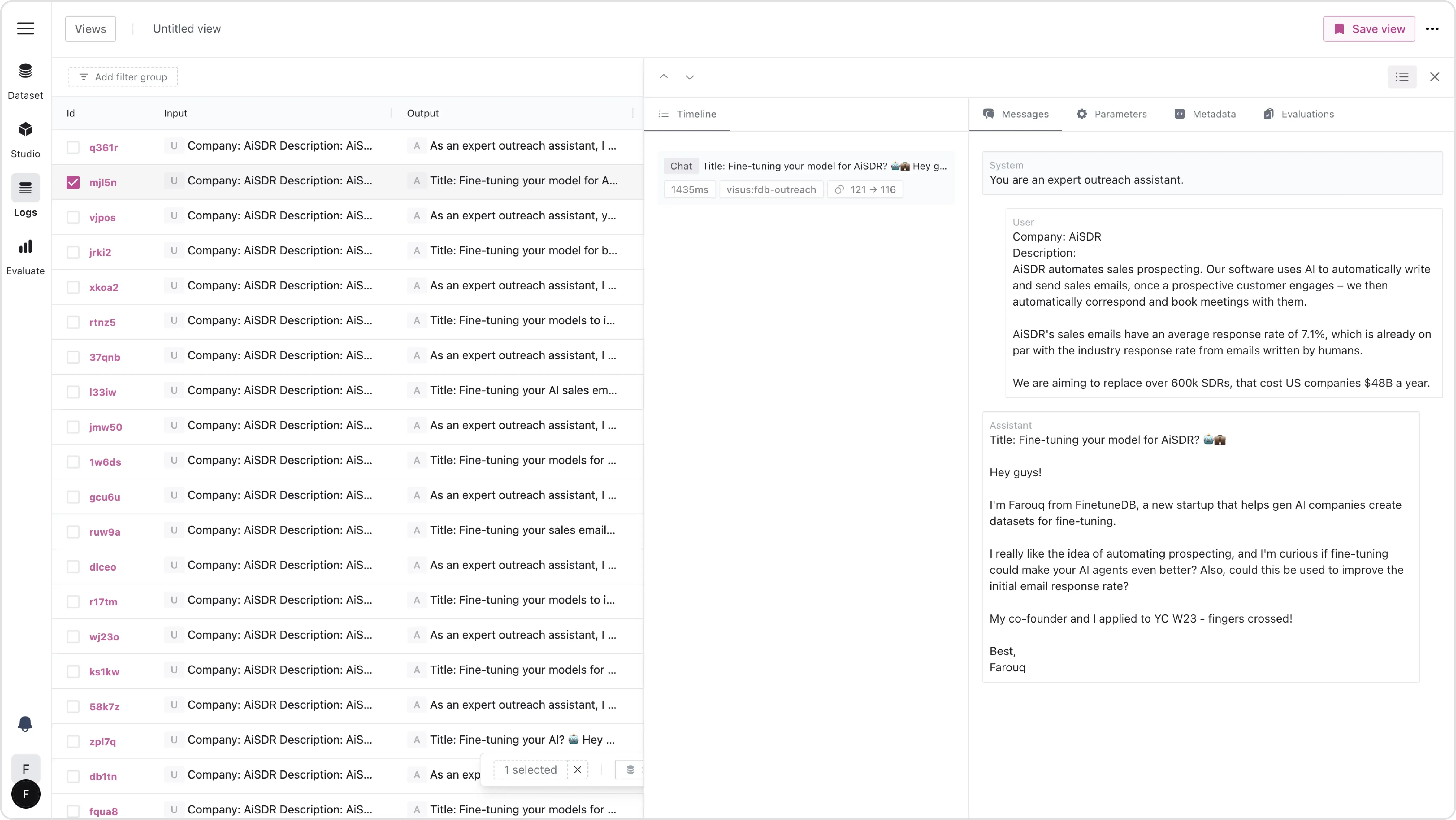

Now create each of these example pairs on the FinetuneDB platform, as seen below.

Preparing the dataset

Collecting and preparing your dataset is the first and most critical step in the fine-tuning process. The quality of the examples you gather directly influences the quality of the output. Begin by identifying your top-performing outreach emails: those where the recipient responded positively or took the desired action.

For each email, structure your data by breaking it down into inputs and outputs. The input is the company’s name and a brief description; the output is the email’s subject line and body text. Consistency in formatting is key, because it helps the model learn the patterns in your writing style. You can start with as few as 10 examples, but make sure they are of the highest quality and reflect your best communication practices. You can create each dataset entry directly on the FinetuneDB platform.
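The consistency rules described above can be checked automatically before training. The sketch below assumes entries are stored as chat-format dictionaries with a `messages` list, and that inputs start with `Company:` and outputs with `Title:` as in the example entry. `validate_dataset` and `example` are hypothetical names, not part of FinetuneDB.

```python
MIN_EXAMPLES = 10  # starting size suggested in this guide
EXPECTED_ROLES = ["system", "user", "assistant"]


def validate_dataset(entries: list) -> list:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if len(entries) < MIN_EXAMPLES:
        problems.append(f"only {len(entries)} examples, expected at least {MIN_EXAMPLES}")

    system_prompts = set()
    for i, entry in enumerate(entries):
        messages = entry.get("messages", [])
        roles = [m.get("role") for m in messages]
        if roles != EXPECTED_ROLES:
            problems.append(f"entry {i}: expected roles {EXPECTED_ROLES}, got {roles}")
            continue
        system, user, assistant = (m.get("content", "") for m in messages)
        system_prompts.add(system)
        if not user.startswith("Company:") or "Description:" not in user:
            problems.append(f"entry {i}: input should contain 'Company:' and 'Description:'")
        if not assistant.startswith("Title:"):
            problems.append(f"entry {i}: output should start with 'Title:'")

    if len(system_prompts) > 1:
        problems.append("system prompt differs between entries")
    return problems


def example(i: int, title_prefix: str = "Title:") -> dict:
    """Build a placeholder entry, used only to demonstrate the checks."""
    return {"messages": [
        {"role": "system", "content": "You are an expert outreach assistant."},
        {"role": "user", "content": f"Company: Example {i}\n\nDescription:\n\nPlaceholder."},
        {"role": "assistant", "content": f"{title_prefix} Hello {i}\n\nHey!\n\nBest,\n\nMe"},
    ]}


good = [example(i) for i in range(10)]
print(validate_dataset(good))                                 # all checks pass
print(validate_dataset(good[:9] + [example(9, "Subject:")]))  # flags the inconsistent entry
```

A check like this catches formatting drift, such as one email labeled `Subject:` instead of `Title:`, before it reaches the model.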

Step 2: Model Training and Costs

Training your fine-tuned model transforms your dataset into an AI outreach email writing assistant.

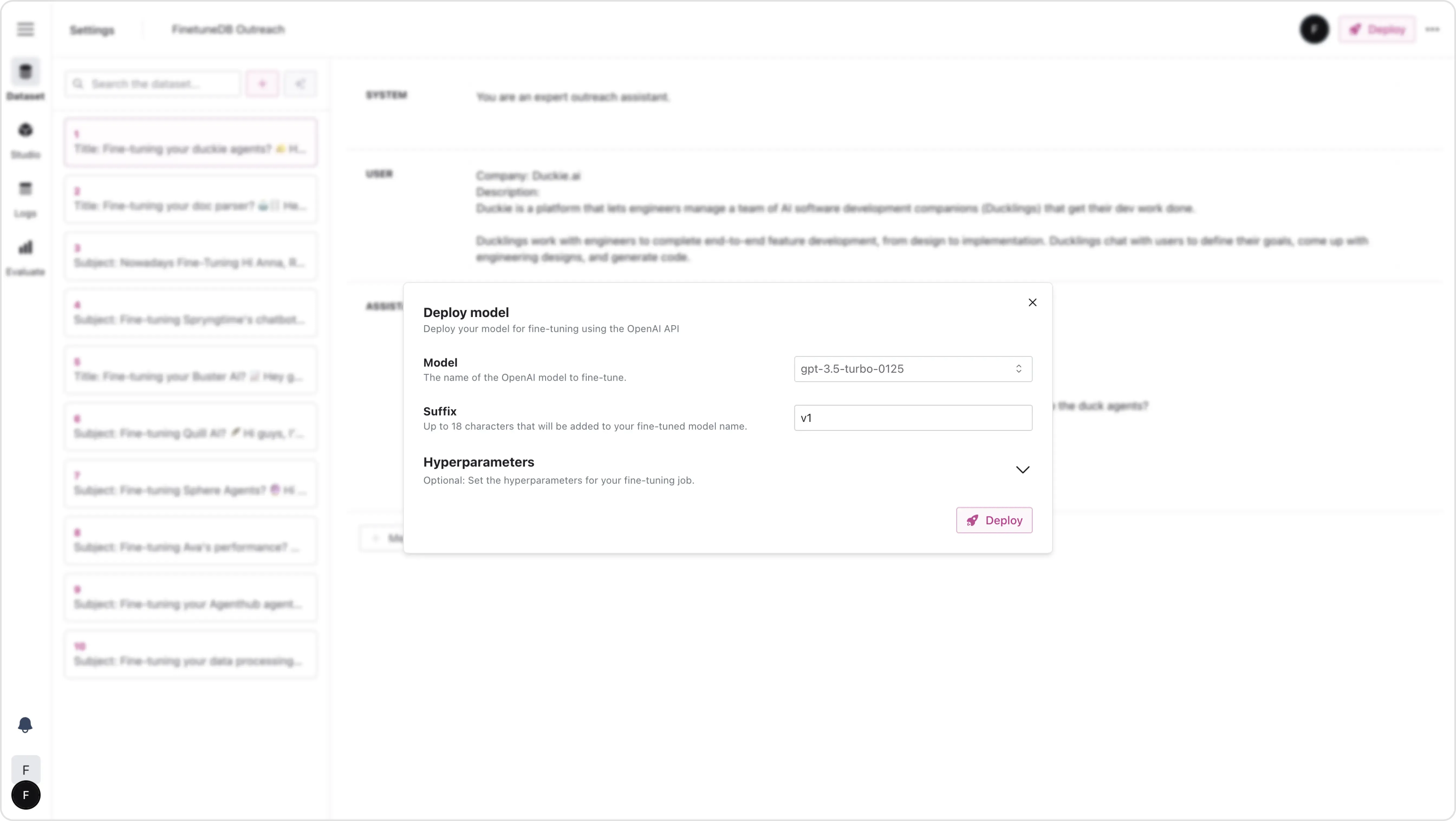

- Upload Dataset: Use the FinetuneDB platform to upload your dataset to OpenAI.

- Training Duration: Duration varies with dataset size and complexity.

  - Small datasets: ~10 minutes.

  - Larger datasets: Several hours.

- Cost: Depends on the size and complexity of your dataset. Refer to the pricing guide for details.

Training GPT-3.5 with the dataset

Training your model is the step where your dataset comes to life. You can deploy the dataset directly to OpenAI with one click via FinetuneDB. Training time depends largely on the dataset’s size and complexity. Smaller datasets of around 10 examples may take only about 10 minutes to process, while larger and more complex datasets can take several hours.
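FinetuneDB handles the upload for you, but it can help to see roughly what starting a training job involves. The sketch below uses the OpenAI Python SDK directly; it is not FinetuneDB's implementation. The helper names `start_finetune` and `wait_for_job` and the file name `outreach_emails.jsonl` are assumptions for illustration.

```python
import time


def start_finetune(client, jsonl_path: str, base_model: str = "gpt-3.5-turbo"):
    """Upload a JSONL dataset and start a fine-tuning job; return the job object."""
    with open(jsonl_path, "rb") as fh:
        uploaded = client.files.create(file=fh, purpose="fine-tune")
    return client.fine_tuning.jobs.create(training_file=uploaded.id, model=base_model)


def wait_for_job(client, job_id: str, poll_seconds: float = 30.0):
    """Poll the job until it reaches a terminal status, then return it."""
    terminal = {"succeeded", "failed", "cancelled"}
    while True:
        job = client.fine_tuning.jobs.retrieve(job_id)
        if job.status in terminal:
            return job
        time.sleep(poll_seconds)


# Usage (requires `pip install openai` and an OPENAI_API_KEY environment variable):
#   from openai import OpenAI
#   client = OpenAI()
#   job = start_finetune(client, "outreach_emails.jsonl")
#   finished = wait_for_job(client, job.id)
#   print(finished.status, finished.fine_tuned_model)
```

Passing the client in as an argument keeps the helpers easy to test without network access.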

The cost of training can also vary. It’s a good idea to consult the pricing guide to understand the potential expenses based on your specific needs. This will help you budget appropriately and ensure you’re getting the most value from the fine-tuning process. Generally speaking, fine-tuning on a small dataset is very cheap, no more than a couple of dollars.
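As a back-of-the-envelope check, training cost scales with the number of tokens in the dataset multiplied by the number of training epochs. The sketch below is an estimate under stated assumptions: roughly four characters per token, three epochs, and a placeholder price per 1,000 tokens. None of these figures come from this guide, so check the pricing guide for current values.

```python
def estimate_training_cost(entries, price_per_1k_tokens, epochs=3, chars_per_token=4.0):
    """Rough estimate: approximate tokens x epochs x price per 1,000 tokens."""
    total_chars = sum(len(m["content"]) for e in entries for m in e["messages"])
    approx_tokens = total_chars / chars_per_token
    return approx_tokens * epochs / 1000 * price_per_1k_tokens


# Ten placeholder entries of 1,200 characters each (about 300 tokens under the heuristic).
entries = [{"messages": [{"role": "user", "content": "x" * 1200}]} for _ in range(10)]

PLACEHOLDER_PRICE = 0.008  # USD per 1,000 training tokens; placeholder, check current pricing
cost = estimate_training_cost(entries, PLACEHOLDER_PRICE)
print(f"approx. ${cost:.3f}")  # approx. $0.072
```

Under these assumptions, a ten-example dataset costs cents rather than dollars, which is in line with the small budgets described above.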

Step 3: Testing Your Fine-tuned Model

Testing ensures that the AI meets your specific requirements before it is fully deployed.

- Use New Prompts: Test the AI with new scenarios not included in the training dataset.

- Evaluate Responses: Ensure the AI’s outputs meet your expectations.

- Adjust as Needed: Refine the dataset and retrain if the outputs fall short.

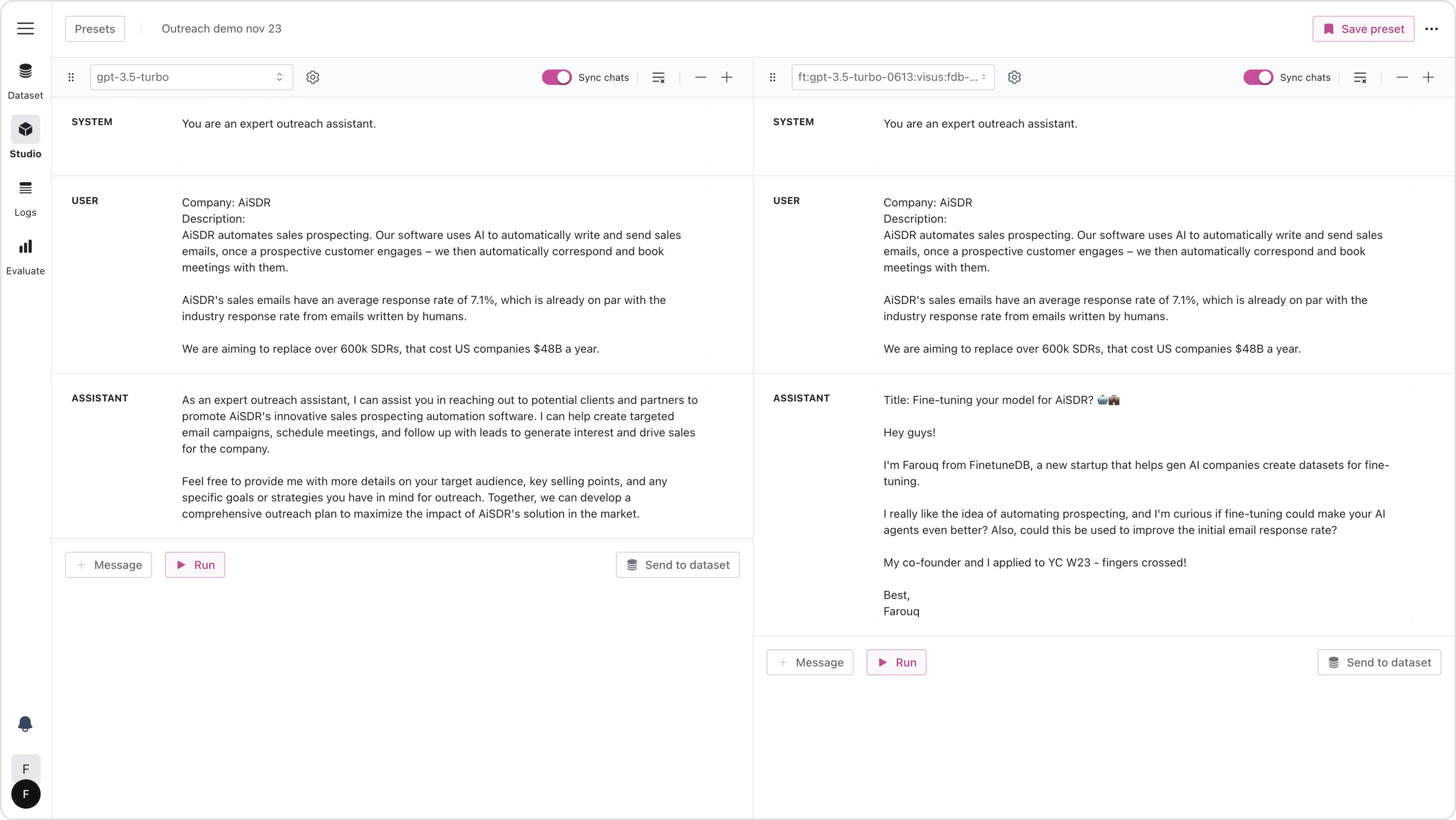

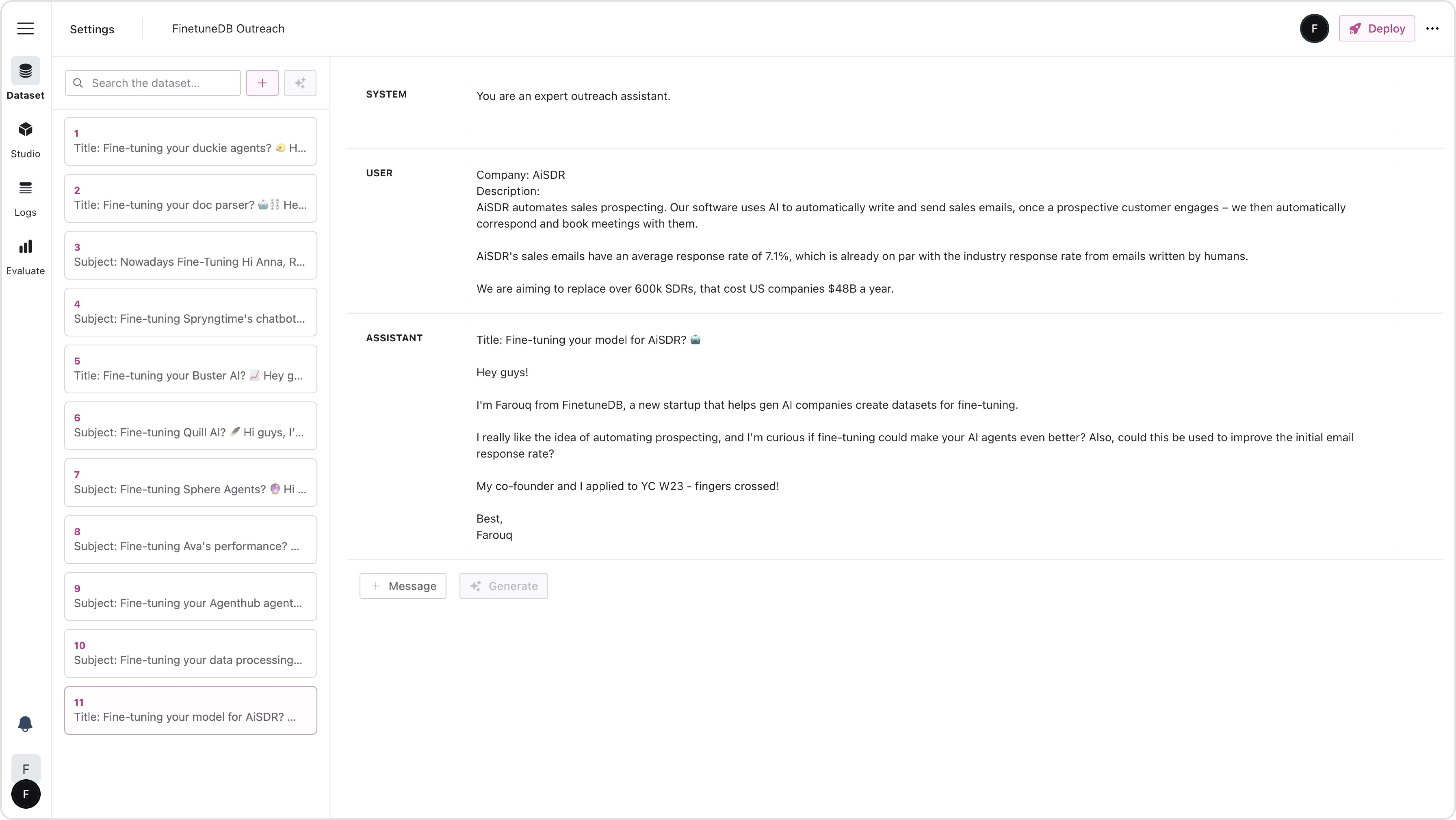

Testing the fine-tuned model in the studio

After training, it’s essential to test your model to ensure it performs as expected. Use prompts that were not part of the training dataset to evaluate the AI’s responses. This testing phase is crucial because it helps you understand how well the model has learned your communication style.

Pay close attention to the AI’s responses. Are they coherent? Do they align with your intended tone and style? If you notice discrepancies, this is the time to make adjustments. Fine-tuning is often an iterative process, requiring several rounds of testing and tweaking to achieve optimal performance.
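Some of these checks can be automated before a human reads the output. The sketch below flags generated emails that break the format used in the training examples. `check_email` is a hypothetical helper, and the `Title:` prefix, the `Best,` sign-off, and the 120-word limit are assumptions drawn from the example entry rather than fixed rules.

```python
def check_email(text: str, max_words: int = 120, sign_off: str = "Best,") -> list:
    """Return a list of format issues; an empty list means the email looks consistent."""
    issues = []
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if not lines or not lines[0].startswith("Title:"):
        issues.append("missing 'Title:' line at the top")
    if sign_off not in lines:
        issues.append(f"missing sign-off line '{sign_off}'")
    body_words = sum(len(line.split()) for line in lines[1:])
    if body_words > max_words:
        issues.append(f"body has {body_words} words, limit is {max_words}")
    return issues


sample = (
    "Title: Fine-tuning your duckie agents? 🐤\n\n"
    "Hey guys!\n\nI used Duckie and enjoyed it.\n\nBest,\n\nFarouq"
)
print(check_email(sample))
print(check_email("Hello there, I wanted to reach out."))
```

Automated checks only cover format. Tone and style still need a human review of each test output.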

Step 4: Deploying Your Fine-tuned AI Email Writer

Once testing is complete, you can integrate the AI into your email outreach workflow.

- Incorporate AI: Integrate the fine-tuned AI into your daily email workflow.

- Maintain Tone of Voice: Ensure the AI-generated emails reflect your voice.

- Monitor Performance: Continuously monitor the AI’s performance and make adjustments as needed.

Monitoring the fine-tuned model in the log viewer

Continuous monitoring is essential after deployment. Every output generation is tracked in your log viewer. Keep an eye on how the AI is performing in actual use.

Once the fine-tuned model is working well, you can start using it in your daily operations. This is where the AI starts to assist in real-world scenarios, in this case by automating email composition. You can either integrate the model directly into an email client or generate the outreach emails in the FinetuneDB studio environment, where you previously tested the model’s responses.
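If you integrate the model into your own tooling, a call to the fine-tuned model might look like the sketch below. It uses the OpenAI Python SDK's chat completions endpoint and sends the same system prompt and input layout as the training entries. `generate_email` and `build_messages` are hypothetical helpers, and the model ID in the usage comment is a placeholder.

```python
SYSTEM_PROMPT = "You are an expert outreach assistant."


def build_messages(company: str, description: str) -> list:
    """Format the prompt exactly like the training inputs."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Company: {company}\n\nDescription:\n\n{description}"},
    ]


def generate_email(client, model_id: str, company: str, description: str) -> str:
    """Ask the fine-tuned model for an outreach email and return its text."""
    response = client.chat.completions.create(
        model=model_id,
        messages=build_messages(company, description),
    )
    return response.choices[0].message.content


# Usage (requires `pip install openai` and an OPENAI_API_KEY environment variable):
#   from openai import OpenAI
#   client = OpenAI()
#   email = generate_email(client, "ft:gpt-3.5-turbo:your-org::placeholder",
#                          "Duckie.ai", "A platform for AI dev companions.")
#   print(email)
```

Reusing the training-time prompt layout matters here: a fine-tuned model is most reliable on inputs that look like the ones it was trained on.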

Step 5: Ongoing Evaluation and Continuous Fine-tuning

To keep the AI effective and aligned with your goals, continuous evaluation and refinement are necessary.

- Evaluate Performance: Regularly assess the AI’s performance in real-world scenarios.

- Collect Feedback: Gather and incorporate human feedback for continuous improvement.

- Refine Model: Use the feedback to refine the dataset and improve the AI’s capabilities.

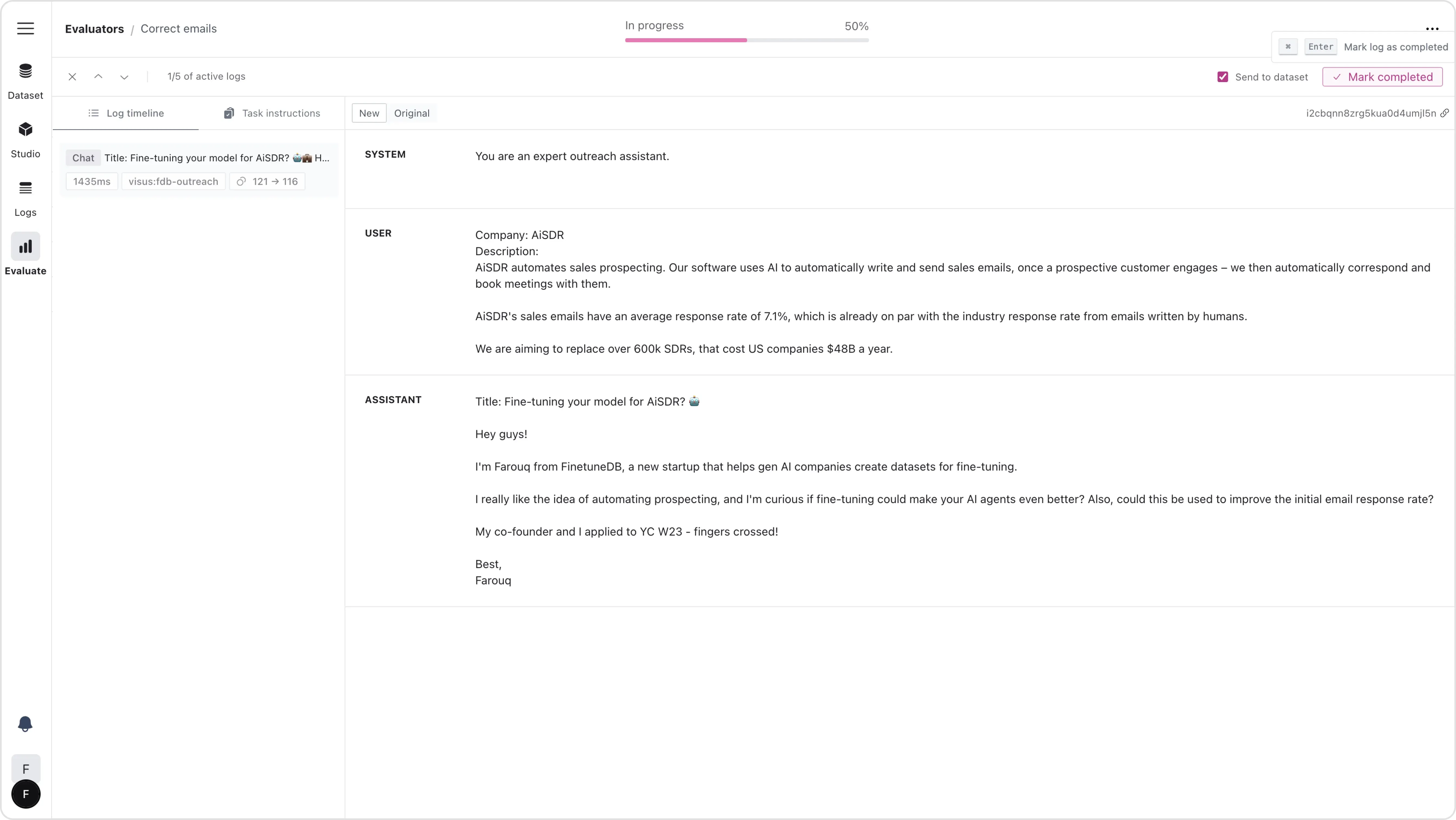

Evaluate fine-tuned model outputs in the evaluation workflow

Regular evaluation is key to maintaining the effectiveness of your AI email writer. Test the AI’s performance in various real-world scenarios and gather feedback from users, or rely on your own feedback if you are the only user. This feedback is invaluable because it shows how well the AI is meeting your communication needs.

New dataset entry from evaluation workflow

Incorporate this feedback into your dataset and retrain the model as needed. This continuous loop of evaluation and refinement ensures that the AI adapts to any changes in your communication style and remains a valuable tool in your outreach efforts.
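The feedback loop can be sketched as a small routine that turns reviewed outputs into new dataset entries. This assumes a local JSONL dataset and a hypothetical review record with `prompt_messages`, `model_output`, `approved`, and an optional `corrected_output`. FinetuneDB's evaluation workflow does this inside the platform, so the field names here are illustrative only.

```python
import json
import os


def add_feedback_entries(dataset_path: str, reviewed: list) -> int:
    """Append approved or corrected outputs to a JSONL dataset; return how many were added."""
    existing = set()
    if os.path.exists(dataset_path):
        with open(dataset_path, encoding="utf-8") as fh:
            existing = {line.strip() for line in fh if line.strip()}

    added = 0
    with open(dataset_path, "a", encoding="utf-8") as fh:
        for item in reviewed:
            # Prefer the human correction; fall back to the model output only if approved.
            final_text = item.get("corrected_output") or (
                item["model_output"] if item.get("approved") else None
            )
            if final_text is None:
                continue  # rejected without a correction: nothing to add
            entry = {"messages": item["prompt_messages"] + [
                {"role": "assistant", "content": final_text}
            ]}
            line = json.dumps(entry, ensure_ascii=False)
            if line not in existing:  # skip exact duplicates
                fh.write(line + "\n")
                existing.add(line)
                added += 1
    return added
```

Storing the corrected text rather than the raw model output is what lets the next training round move the model closer to your style.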

Frequently Asked Questions: Fine-tuning GPT-3.5 for Email Writing

Why should I fine-tune GPT-3.5 for email writing?

Fine-tuning GPT-3.5 for email writing helps create personalized and effective outreach emails that match your specific communication style, leading to improved engagement and response rates.

How do I start preparing a dataset for fine-tuning?

Begin by gathering high-quality examples of your best outreach emails. Structure the data consistently with inputs (e.g., company name, description) and outputs (e.g., email subject line, body text). Quality is more important than quantity; start with at least 10 well-crafted examples.

What platform should I use for fine-tuning GPT-3.5?

The FinetuneDB platform is recommended for its user-friendly interface and its integration with OpenAI’s tools as well as open-source models. It allows you to create and upload datasets, train models, and monitor performance.

How long does the training process take?

Training duration depends on your dataset’s size and complexity. Small datasets (~10 examples) may take around 10 minutes, while larger datasets can take several hours.

What are the costs associated with fine-tuning GPT-3.5?

The cost varies based on the dataset size and complexity. Refer to FinetuneDB’s pricing guide for detailed information. Generally, fine-tuning with a small dataset is affordable, costing no more than a few dollars.

How do I test the fine-tuned model?

After training, test the AI with new prompts not included in the training dataset. Evaluate the responses to ensure they meet your expectations and adjust the model as needed to improve performance.

How can I deploy the fine-tuned model in my workflow?

Integrate the fine-tuned AI into your daily email workflow. You can use it directly in an email client or within the FinetuneDB studio environment to generate outreach emails.

What is the importance of ongoing evaluation and continuous fine-tuning?

Regular evaluation and refinement keep the AI aligned with your communication goals. Gather feedback from real-world use, incorporate it into your dataset, and retrain the model to adapt to changes and improve effectiveness.

Can I fine-tune GPT-3.5 for other applications besides email writing?

Yes, GPT-3.5 can be fine-tuned for various applications, including customer service, content creation, and more. The process involves preparing a relevant dataset and following similar training, testing, and deployment steps.

Where can I find more resources on fine-tuning AI models?

Visit the FinetuneDB blog for comprehensive guides and resources on fine-tuning AI models, including detailed explanations of datasets, training processes, cost considerations, and best practices.