What is Fine-tuning? Simply Explained

Learn about fine-tuning in AI, its impact on Large Language Models, and how it can be used for cost savings.

DATE

Tue Oct 31 2023

AUTHOR

Felix Wunderlich

CATEGORY

Guide

What is Fine-tuning in AI

Fine-tuning in AI involves refining a pre-trained model so that it performs specific tasks more effectively. Models like GPT (Generative Pre-trained Transformer) first learn general language patterns from extensive datasets. To specialize in particular areas or adapt to unique requirements, however, they need fine-tuning. This process uses a smaller, more focused fine-tuning dataset relevant to a specific task, which improves the model’s accuracy and efficiency in that context.

Think of fine-tuning as a tailored training plan for an athlete: it makes sure they’re prepped and primed for the specific challenges ahead.

Why is Fine-tuning Important

Fine-tuning is essential in AI for several strategic reasons, primarily focusing on improving efficiency, effectiveness, and specific task performance:

- Cost Efficiency: Fine-tuning smaller models for specific tasks can be highly cost-effective compared to operating larger, more generalized models.

- Speed and Time Savings: A smaller model fine-tuned on a specific dataset is typically much faster at its task than a larger, general-purpose model.

- Enhanced Performance for Specific Tasks: Fine-tuning sharpens a model’s ability to handle particular domains or tasks with greater precision and relevance.

- Business Application: Beyond technical benefits, fine-tuning facilitates more nuanced applications such as aligning the model’s outputs with a company’s brand voice, or other custom use cases.

Fine-tuning vs. RAG: Key Differences in LLM Optimization

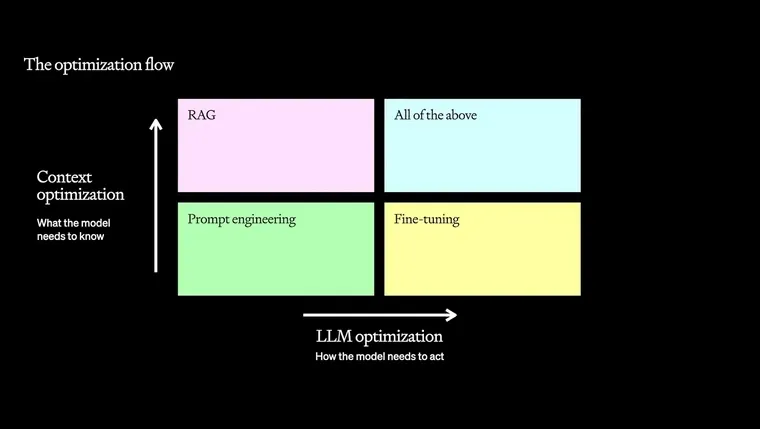

Retrieval-Augmented Generation (RAG) and fine-tuning are two complementary approaches within the customization flow for Large Language Models (LLMs), each playing a distinct role in improving model performance. Together with prompt engineering, they are the main techniques for customizing LLMs.

RAG

RAG focuses on context optimization, that is, improving what the model needs to know. It integrates external knowledge retrieval into the generation process, so the model can fetch relevant information from a large corpus of data at query time and use it in its response.
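To make the retrieval idea concrete, here is a minimal sketch of the "retrieve, then generate" flow in Python. It is a toy under stated assumptions: relevance is scored by simple word overlap (production RAG systems usually rank passages by embedding similarity), and the function names `retrieve` and `build_prompt` as well as the sample corpus are hypothetical. The resulting prompt would then be sent to the LLM; that call is left out.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, document: str) -> int:
    """Toy relevance score: how many distinct query words appear in the document."""
    doc_counts = Counter(tokenize(document))
    return sum(1 for token in set(tokenize(query)) if doc_counts[token] > 0)


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k documents with the highest overlap score."""
    ranked = sorted(corpus, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, corpus: list[str], k: int = 2) -> str:
    """Prepend the retrieved passages to the user question (hypothetical template)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, corpus, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base standing in for a company's documents.
corpus = [
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Refunds are processed within 14 days of receiving the returned item.",
    "The premium plan includes priority support and API access.",
]
print(build_prompt("How long do refunds take?", corpus, k=1))
```

Note that the model’s weights are untouched here: only the prompt changes, which is the key contrast with fine-tuning.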

Fine-Tuning

Fine-tuning focuses on how the model needs to act. It involves further training a pre-trained model on a specific, often smaller, dataset tailored to particular needs or tasks. By adjusting the weights within the model based on the training examples provided, this process refines the model’s responses and makes them better suited to particular applications.
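The weight-adjustment idea can be illustrated without an LLM. The sketch below uses a hypothetical two-parameter linear model as a stand-in: it starts from "pre-trained" weights and continues training them with plain gradient descent on a small task dataset. Real fine-tuning applies the same principle to billions of weights; the model, data, learning rate, and epoch count here are illustrative assumptions.

```python
def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the linear model y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w, b, data, learning_rate=0.05, epochs=200):
    """Continue training existing weights with full-batch gradient descent.

    Returns the updated weights and the loss after each epoch, the kind of
    curve you would watch while monitoring a training run.
    """
    n = len(data)
    history = []
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mse(w, b, data))
    return w, b, history


# Hypothetical "pre-trained" weights, standing in for general knowledge.
pretrained_w, pretrained_b = 2.0, 0.0
# Small task-specific dataset that follows y = 2x + 1.
task_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b, history = fine_tune(pretrained_w, pretrained_b, task_data)
print(f"loss before: {mse(pretrained_w, pretrained_b, task_data):.4f}")
print(f"loss after:  {history[-1]:.4f}  (w={w:.3f}, b={b:.3f})")
```

Because training starts from weights that are already close to the target, only the offset `b` has to move much, which mirrors why fine-tuning needs far less data and compute than training from scratch.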

[Figure: Fine-tuning vs. RAG]

What are Fine-tuning Datasets

Datasets are central to the fine-tuning process because they shape the behavior and performance of the LLM. A well-curated dataset is essential for getting the model’s behavior just right. In the next article, we’ll dive deeper into datasets and their crucial role in fine-tuning.

How Much does Fine-tuning Cost

Fine-tuning a model like GPT-3.5 does come with some costs, but think of it as a smart investment in your project’s future: you pay a small amount upfront to teach the model exactly what it needs to know for your specific tasks. With token-based pricing, the training cost grows with the size of your dataset and the number of epochs. This upfront investment can lead to a model that is not only faster and more accurate but also more cost-effective in the long run. For a clear picture of what these costs look like and how they translate into savings, check out our detailed guide.
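As a rough illustration, assuming token-based billing where every training token is charged once per epoch (the way OpenAI bills GPT-3.5 fine-tuning), the training cost and the point at which per-request savings repay it can be estimated as below. All prices and volumes are hypothetical placeholders, not real rates; substitute your provider’s current pricing.

```python
import math
from typing import Optional


def estimate_training_cost(dataset_tokens: int, epochs: int, price_per_1k_tokens: float) -> float:
    """Training cost = tokens in the dataset x epochs x price per token."""
    if dataset_tokens < 0 or epochs < 1 or price_per_1k_tokens < 0:
        raise ValueError("tokens and price must be non-negative and epochs at least 1")
    return dataset_tokens * epochs / 1000 * price_per_1k_tokens


def break_even_requests(training_cost: float, general_cost_per_request: float,
                        tuned_cost_per_request: float) -> Optional[int]:
    """Number of requests after which per-request savings cover the training cost."""
    saving = general_cost_per_request - tuned_cost_per_request
    if saving <= 0:
        return None  # the tuned model is not cheaper per request, so it never breaks even
    return math.ceil(training_cost / saving)


# Hypothetical numbers for illustration only.
cost = estimate_training_cost(dataset_tokens=100_000, epochs=3, price_per_1k_tokens=0.008)
requests = break_even_requests(cost, general_cost_per_request=0.002, tuned_cost_per_request=0.0005)
print(f"training cost: ${cost:.2f}, break-even after about {requests} requests")
```

The break-even estimate ignores hosting fees and the effort of building the dataset, so treat it as a lower bound on the volume needed before fine-tuning pays off.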

How to Fine-tune LLMs: Step-by-step Guide

- Format Your Data: Prepare your dataset in a format that the language model can process. The standard format for many models, including GPT models, is JSONL. In this format, each line of the file represents a separate data entry. Each entry typically includes a prompt and the expected response, formatted in a JSON object.

- Upload the Dataset: Once your dataset is ready and formatted correctly, the next step is to upload it to the environment where the model training will occur. This could involve uploading the JSONL file to a cloud storage service or directly to the training platform, depending on the tools and infrastructure you are using.

- Configure the Fine-Tuning Parameters: Before starting the training, you’ll need to configure various parameters that control the fine-tuning process. This includes setting the learning rate, the number of epochs (how many times the model should iterate over the entire dataset), and other model-specific settings that can affect the performance and outcomes of the fine-tuning.

- Start the Training Process: With the dataset uploaded and parameters set, you can begin the fine-tuning process. During this stage, the language model learns from the provided examples, adjusting its internal weights and biases to better predict the desired outputs based on the inputs it receives.

- Monitor the Training Progress: It’s important to keep an eye on the training as it progresses. Monitoring tools can help you track metrics like loss and accuracy, providing insights into how well the model is adapting to the new data. Adjustments might be needed if the model isn’t performing as expected.

- Evaluate the Model: After training is complete, evaluate the model’s performance to make sure it has learned effectively from the dataset. This typically involves testing the model on a separate validation dataset and having human domain experts review its outputs, to see how it performs on data it hasn’t seen during training.

- Iterate and Improve: Fine-tuning is an iterative process. Based on the evaluation, you might need to make adjustments to the dataset, fine-tuning parameters, or even the model architecture. This step may be repeated several times to achieve the best results.
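The first step, together with the held-out split that the evaluation step relies on, can be sketched in Python using only the standard library. The sketch assumes the chat-style JSONL layout that OpenAI documents for GPT-3.5 Turbo fine-tuning, where each line holds a `messages` list ending with the assistant’s expected reply; other providers may expect different field names, so check their documentation. The system prompt, file names, and placeholder question/answer pairs are hypothetical.

```python
import json
import random

# Hypothetical system prompt describing the desired behavior.
SYSTEM_PROMPT = "You are a helpful support assistant for ExampleCo."


def to_chat_record(prompt: str, response: str) -> dict:
    """Wrap one prompt/response pair in a chat-style training record."""
    return {"messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": response},
    ]}


def write_jsonl(records: list[dict], path: str) -> None:
    """Write one JSON object per line (the JSONL format)."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def validate_jsonl(path: str) -> int:
    """Check that every line parses and ends with an assistant reply; return the record count."""
    count = 0
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            record = json.loads(line)  # raises json.JSONDecodeError on malformed lines
            messages = record.get("messages", [])
            if not messages or messages[-1].get("role") != "assistant":
                raise ValueError(f"{path}, line {line_no}: must end with an assistant message")
            count += 1
    return count


def split_train_validation(pairs, validation_fraction=0.2, seed=42):
    """Shuffle reproducibly and hold out a fraction of examples for evaluation."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


pairs = [(f"Question {i}", f"Answer {i}") for i in range(10)]  # placeholder data
train, validation = split_train_validation(pairs)
write_jsonl([to_chat_record(p, r) for p, r in train], "train.jsonl")
write_jsonl([to_chat_record(p, r) for p, r in validation], "validation.jsonl")
print(validate_jsonl("train.jsonl"), validate_jsonl("validation.jsonl"))
```

The resulting `train.jsonl` is what you would upload in the second step, while `validation.jsonl` is kept aside so the evaluation uses examples the model never saw. The fixed seed keeps the split identical between runs, which makes iterations comparable.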

Streamlining Fine-tuning with FinetuneDB

FinetuneDB streamlines the fine-tuning process by offering an intuitive platform for creating and managing datasets that integrates seamlessly with model providers. It simplifies preparing datasets in the required formats and makes it easy to upload them to model providers for training. FinetuneDB also provides evaluation frameworks to continuously monitor and improve model performance, making sure that your fine-tuned models are both effective and efficient.

Frequently Asked Questions

What exactly is fine-tuning in AI?

Fine-tuning in AI refers to the process of training a pre-existing, general-purpose model on a specific, smaller dataset to enhance its performance on particular tasks or domains.

Why is fine-tuning essential for AI models?

Fine-tuning improves the efficiency, accuracy, and speed of AI models, making them more cost-effective and better suited to specific applications such as understanding specialized terminology or aligning with a company’s brand voice.

How does fine-tuning differ from training a model from scratch?

Fine-tuning modifies an already trained model for specific improvements, whereas training from scratch builds a model’s abilities from the ground up. Fine-tuning is typically faster and less resource-intensive due to leveraging pre-learned patterns.

What are fine-tuning datasets?

Fine-tuning datasets are specially curated collections of data that include examples directly relevant to the specific tasks the model is expected to perform. These datasets are essential for effectively customizing the model’s responses.

What is the difference between fine-tuning and RAG?

Fine-tuning adjusts a model’s parameters to improve performance on specific tasks, focusing on how the model behaves. RAG, on the other hand, enhances the model’s responses by dynamically retrieving external information relevant to the query at hand.

What are the costs of fine-tuning?

Fine-tuning involves costs for processing the task-specific dataset and for the computational resources needed to further train the model. However, it is generally more cost-effective than developing a new model, because it starts from a pre-trained model and uses smaller, more targeted datasets.

How long does fine-tuning take?

The duration of fine-tuning varies with the size of the dataset and the complexity of the task, but it is generally much quicker than full model training because it starts from a pre-trained base.

Can fine-tuning introduce biases?

Yes, if the fine-tuning dataset is not diverse or balanced, it can introduce or perpetuate biases. Careful dataset curation is crucial to minimize this risk.
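One simple, partial check before training is to look at how the labels in a classification-style dataset are distributed. The sketch below flags labels whose share falls under a threshold; the sentiment labels and the 10% cutoff are hypothetical, and label balance is only one aspect of bias (it says nothing about, for example, who wrote the examples or which topics they cover).

```python
from collections import Counter


def label_shares(labels: list[str]) -> dict[str, float]:
    """Fraction of examples that carry each label."""
    if not labels:
        return {}
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


def underrepresented(labels: list[str], min_share: float = 0.1) -> list[str]:
    """Labels whose share of the dataset is below min_share (a hypothetical threshold)."""
    return sorted(label for label, share in label_shares(labels).items() if share < min_share)


# Hypothetical sentiment labels from a fine-tuning dataset.
labels = ["positive"] * 45 + ["negative"] * 50 + ["neutral"] * 5
print(label_shares(labels))
print(underrepresented(labels))
```

A label that is missing from the data entirely will not appear in the counts at all, so compare the result against the full list of labels you expect the model to handle.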

How do you evaluate the effectiveness of fine-tuning?

Effectiveness is evaluated by comparing the model’s performance on a validation set before and after fine-tuning. Key metrics include accuracy, precision, and how well the model meets the specific needs of its application.
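A before-and-after comparison on the same validation set can be sketched as follows, assuming the model’s outputs have already been mapped to discrete labels; free-form text would need task-specific scoring or human review instead. The references and both sets of predictions are hypothetical placeholders, not real results.

```python
def accuracy(predictions: list[str], references: list[str]) -> float:
    """Share of predictions that exactly match the reference labels."""
    if len(predictions) != len(references) or not references:
        raise ValueError("need equally long, non-empty prediction and reference lists")
    return sum(p == r for p, r in zip(predictions, references)) / len(references)


def precision(predictions: list[str], references: list[str], positive_label: str) -> float:
    """Of the items predicted as positive_label, the share that truly are."""
    predicted_positive = [r for p, r in zip(predictions, references) if p == positive_label]
    if not predicted_positive:
        return 0.0  # convention chosen here when nothing was predicted as positive
    return sum(r == positive_label for r in predicted_positive) / len(predicted_positive)


# Hypothetical validation labels and model outputs.
references = ["spam", "ham", "spam", "ham", "spam"]
base_predictions = ["spam", "spam", "ham", "ham", "spam"]   # before fine-tuning
tuned_predictions = ["spam", "ham", "spam", "ham", "ham"]   # after fine-tuning

for name, preds in [("base", base_predictions), ("fine-tuned", tuned_predictions)]:
    print(name, accuracy(preds, references), precision(preds, references, "spam"))
```

Scoring both models on the identical held-out set is what makes the comparison meaningful; with a real dataset you would also want far more than five examples before drawing conclusions.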

How does FinetuneDB facilitate fine-tuning?

FinetuneDB offers a platform that streamlines creating, managing, and deploying fine-tuned models. It simplifies the preparation of datasets and integrates seamlessly with model training and evaluation processes.